A few years ago, I was in Syracuse for a family trip to the zoo. Syracuse is about 50 miles from where I live and it has a very nice little zoo.

That year was particularly exciting because a Trader Joe’s had just opened in Syracuse. We don’t have one where we live* (sadly!), so we always stock up on our favorite specialty groceries when we’re near a Trader Joe’s.

On this particular trip, though, we had an unwelcome surprise. My credit card company believed my Trader Joe’s spree was fraudulent and declined the transaction. I got a notice on my phone and was able to fix it right away, so it wasn’t the big inconvenience it could have been.

But this made us wonder what it was about the transaction that led the bank to believe it was fraudulent. Do credit card thieves often skip town and go grocery shopping?

The bank was clearly betting so. It must have a statistical model that judges which characteristics of a transaction make it likely enough to be fraudulent that the bank shuts it down.

With any model, though, you’re never going to hit 100% accuracy. And because the model will sometimes be wrong, there’s a tradeoff between tightening standards to catch the thieves and annoying your customers.

Measuring Accuracy of Model Predictions

There are many ways to measure how well a statistical model predicts a binary outcome. Three very common measures are accuracy, sensitivity, and specificity.

These aren’t the only ways to do it. If you’re in a field like data science, you might be more familiar with terms like recall and precision. Here I’m focusing on sensitivity and specificity because they’re the building blocks of ROC Curves.

All of these concepts apply to any predictive model with a binary outcome: logistic regression, probit regression, a single medical diagnostic test, CART, random forests, and so on.

Accuracy is one of those rare terms in statistics that means just what we think it does, but sensitivity and specificity are a little more complicated. To understand all three, first we have to consider the situation of predicting a binary outcome.

The basic situation is this: for each trial (in our example, each transaction), there is only one true outcome: a Positive or a Negative. In my example, we’ll assume a Positive is a stolen credit card. That’s what the bank is on the lookout for.

And of course we need the model to predict the outcome better than randomly guessing. Imagine if your credit card transactions were randomly declined for fraud. You would stop using the card.

Understanding the Confusion Matrix

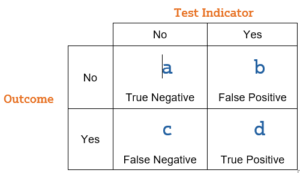

So here is a little table of all the possible situations:

This table has the fancy name of a confusion matrix, but you can see that it’s pretty simple.

The Test Indicator is whatever process we’re using to predict whether each individual is a Yes or a No on the outcome we’re interested in. Does the bank’s model predict a thief?

The Outcome is what actually happens. Was the card really stolen?

For some decisions, those in box a, the model correctly predicted a No. All good. This is a true negative. The customer is using their own card and the bank believes it. The transaction goes through and the family happily snacks on dried mango on the drive home.

Box b is the count of those who were predicted to be Yeses but were actual Nos. Not so good. False positive. The customer isn’t happy that their real grocery shopping gets declined. Only some quick follow-up on the phone saves them.

Box c is the opposite of b. Those who were predicted to be Nos but were actual Yeses. This one is not so good either. False negative. The thief gets away with a load of risotto and dark chocolate covered almonds.

And finally some transactions were predicted to be Yeses and truly were Yeses. These individuals are all in box d. The thief is shut down. Justice is served.
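For readers who want to see the four boxes computed rather than drawn, here is a minimal Python sketch that tallies them from a list of model predictions and a list of true outcomes. The function name `confusion_counts` and the convention that `True` means fraud (a Positive) are illustrative assumptions, not the bank’s method or any library’s API.

```python
def confusion_counts(predicted, actual):
    """Tally the four boxes of the confusion matrix.

    predicted, actual: sequences of booleans, True = fraud (a Positive),
    False = legitimate (a Negative). Returns (a, b, c, d), where
    a = true negatives, b = false positives,
    c = false negatives, d = true positives.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    a = b = c = d = 0
    for pred, act in zip(predicted, actual):
        if not pred and not act:
            a += 1  # predicted legitimate, truly legitimate
        elif pred and not act:
            b += 1  # flagged as fraud, but the customer was legitimate
        elif not pred and act:
            c += 1  # predicted legitimate, but it was really fraud
        else:
            d += 1  # flagged as fraud, and it really was fraud
    return a, b, c, d


# Five hypothetical transactions.
predicted = [False, True, False, True, False]
actual = [False, False, True, True, False]
print(confusion_counts(predicted, actual))  # (2, 1, 1, 1)
```

Each transaction lands in exactly one box, so a + b + c + d always equals the number of transactions.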

Accuracy of Models

A perfectly accurate model would put every transaction into boxes a and d. Thieves are stopped but customers are not.

A model that is so bad it’s worthless would have a lot of b’s (angry customers without groceries) and c’s (happy thieves with groceries) and possibly both.

The simplest measure is Accuracy: the proportion of individuals who were correctly classified, that is, the True Positives plus the True Negatives out of all cases.

Accuracy = (a+d)/(a+b+c+d)
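The formula translates directly into code. Here is a minimal Python sketch using the box letters a to d as defined above; the counts in the example are made up for illustration.

```python
def accuracy(a, b, c, d):
    """Proportion correctly classified: (a + d) / (a + b + c + d).

    a = true negatives, b = false positives,
    c = false negatives, d = true positives.
    """
    total = a + b + c + d
    if total == 0:
        raise ValueError("the confusion matrix has no observations")
    return (a + d) / total


# Hypothetical counts: 90 + 2 correct out of 100 transactions.
print(accuracy(a=90, b=5, c=3, d=2))  # 0.92
```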

This is helpful for sure, but sometimes it matters whether we’re correctly identifying the Positives or the Negatives. It may be worth annoying a few customers to make sure no thieves get away.

Or it might be worth letting a few thieves get away in order to not annoy too many customers.

Another issue is that we can generally classify more Positives correctly simply by classifying fewer Negatives correctly, or vice versa. This may have important practical implications, but the overall Accuracy rate may barely change.

Worse, we could improve overall Accuracy just by making the test better at identifying the more common category, even if it then misses more of the rare one.
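A small numeric sketch shows how this plays out when fraud is rare. The counts below are hypothetical (1,000 transactions, 10 of them fraudulent), chosen only to illustrate the point; the helper names are ours, not from any library.

```python
# Hypothetical counts for 1,000 transactions, 10 of which are fraudulent.
# Box letters: a = true negatives, b = false positives,
#              c = false negatives, d = true positives.

def accuracy(a, b, c, d):
    return (a + d) / (a + b + c + d)

def share_of_frauds_caught(c, d):
    # Of the truly fraudulent transactions, what fraction were flagged?
    return d / (c + d)

# A "model" that never flags anything: every transaction is called legitimate.
never_flag = dict(a=990, b=0, c=10, d=0)

# A model that catches 8 of the 10 frauds but also declines
# 30 legitimate purchases.
some_flags = dict(a=960, b=30, c=2, d=8)

for name, m in [("never_flag", never_flag), ("some_flags", some_flags)]:
    print(name,
          "accuracy =", accuracy(**m),
          "frauds caught =", share_of_frauds_caught(m["c"], m["d"]))
```

The model that never flags anything scores 0.99 Accuracy, beating the other model’s 0.968, yet it catches none of the frauds, while the second model catches 0.8 of them.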

So a better approach is to look at the accuracy for Positives and Negatives separately.

What Sensitivity and Specificity Measure

These two values are called Sensitivity and Specificity.

Sensitivity = d/(c+d): The proportion of observed positives that were predicted to be positive. In other words, of all the transactions that were truly fraudulent, what percentage did we find?

It’s also called the true positive rate, or recall.

Specificity = a/(a+b): The proportion of observed negatives that were predicted to be negatives. In other words, of all the transactions that were legitimate, what percentage did we predict to be so?

It’s also called the true negative rate.
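Both definitions are one-line calculations. A minimal Python sketch, with box letters as defined above and hypothetical counts:

```python
def sensitivity(c, d):
    """True positive rate (recall): d / (c + d).
    Of the truly fraudulent transactions, the share the model flagged."""
    return d / (c + d)


def specificity(a, b):
    """True negative rate: a / (a + b).
    Of the truly legitimate transactions, the share the model let through."""
    return a / (a + b)


# Hypothetical counts: 20 frauds (15 caught), 1,000 legitimate (900 passed).
a, b, c, d = 900, 100, 5, 15
print(sensitivity(c, d))  # 0.75
print(specificity(a, b))  # 0.9
```

Note that each measure uses only one row of the truth: Sensitivity looks only at the fraudulent transactions (c and d), and Specificity only at the legitimate ones (a and b).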

Ideally, the test will result in both being high, but usually there is a tradeoff. Every test needs to pick a threshold for how high a probability of fraud has to be before we call it a fraud. The default in most software is .50, but that’s something you can change.

Lowering that threshold increases Sensitivity but generally decreases Specificity, and raising it does the reverse. It’s important to understand this as you’re choosing that threshold and evaluating a model.
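To see the tradeoff, here is a minimal Python sketch that applies three different thresholds to the same set of predicted fraud probabilities. The scores and outcomes are made up, the helper `sens_spec_at` is hypothetical, and it assumes a transaction is called fraud when its score is greater than or equal to the threshold.

```python
# Hypothetical predicted probabilities of fraud, with the true outcome
# (True = fraud). These numbers are invented for illustration.
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.85, 0.95]
is_fraud = [False, False, False, True, False, False, True, False, True, True]


def sens_spec_at(threshold, scores, is_fraud):
    """Call a transaction fraud when score >= threshold, then
    return (sensitivity, specificity)."""
    tp = fn = tn = fp = 0
    for score, fraud in zip(scores, is_fraud):
        flagged = score >= threshold
        if fraud and flagged:
            tp += 1  # caught a thief
        elif fraud:
            fn += 1  # thief got away
        elif flagged:
            fp += 1  # annoyed a customer
        else:
            tn += 1  # customer's purchase went through
    return tp / (tp + fn), tn / (tn + fp)


for t in (0.3, 0.5, 0.7):
    sens, spec = sens_spec_at(t, scores, is_fraud)
    print(f"threshold {t:.2f}: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

With these made-up numbers, the output is sensitivity 1.00 and specificity 0.50 at a threshold of 0.30, 0.75 and 0.67 at 0.50, and 0.50 and 0.83 at 0.70: each step down in the threshold catches more thieves and declines more legitimate customers.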

*Hooray! We have our very own Trader Joe’s now about 2 miles from my house.

Originally published June 5, 2015

Updated 2/10/25

This is a nice, understandable example. I would add, though, that in medical examples, the 2×2 confusion matrix often has the test results in the rows (T+ in row 1, T- in row 2) and the true disease state in the columns (D+ in column 1, D- in column 2). But the letters a, b, c, and d still represent the top left, top right, bottom left, and bottom right cells, respectively. So, if we recast Karen’s table that way, it looks like this:

|    | D+ | D- |
|----|----|----|
| T+ | a  | b  |
| T- | c  | d  |

In this arrangement, Sens = a/(a+c) and Spec = d/(d+b). Therefore, if students (or others) think of Sens and Spec in terms of a, b, c, and d, they will struggle if they encounter a 2×2 confusion matrix with a different layout from the one they learned. For that reason, I advise students to think in terms of conditional probabilities, like this:

Sens = p(T+ | D+)

= d/(c+d) in Karen’s table

= a/(a+c) in my table

Spec = p(T- | D-)

= a/(a+b) in Karen’s table

= d/(b+d) in my table

If students find conditional probabilities too difficult, one can also think in terms of TP, FP, TN, and FN. In Karen’s 2×2 table:

a = TN

b = FP

c = FN

d = TP

In my table, on the other hand:

a = TP

b = FP

c = FN

d = TN

But in all possible table layouts:

Sens = TP/(TP+FN)

Spec = TN/(TN+FP)
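The same point can be checked in a short Python sketch. The counts are hypothetical, and the same data are written once in each layout discussed above; mapping the cells to TP, FP, FN, and TN first gives identical answers regardless of layout. The helper name `sens_spec` is illustrative.

```python
def sens_spec(tp, fp, fn, tn):
    """Layout-independent definitions."""
    sens = tp / (tp + fn)  # p(T+ | D+)
    spec = tn / (tn + fp)  # p(T- | D-)
    return sens, spec


# Hypothetical counts in Karen's layout: a = TN, b = FP, c = FN, d = TP.
karen = [[900, 100],
         [5, 15]]

# The same counts in the medical layout (rows T+, T-; columns D+, D-):
# a = TP, b = FP, c = FN, d = TN.
medical = [[15, 100],
           [5, 900]]

(a, b), (c, d) = karen
from_karen = sens_spec(tp=d, fp=b, fn=c, tn=a)

(a, b), (c, d) = medical
from_medical = sens_spec(tp=a, fp=b, fn=c, tn=d)

print(from_karen, from_medical)  # (0.75, 0.9) (0.75, 0.9)
```

Reading the letters a to d the same way in both tables would give the wrong answer for one of them; reading them as TP, FP, FN, and TN does not.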

The overall point I am trying to make is that we should encourage students to think about diagnostic test properties in terms of the *general concepts* rather than in terms of the letters that are used to represent the cell counts. The meanings of the letters a to d change when the layout of the table changes. But the general concepts remain the same.

Great way of explaining the concepts – clear and easy to understand!!

Ty very much for this very clear explanation. I have seen explanations of sensitivity and specificity be all over the place in many articles.

Very clear explanation. The Trader Joe’s example is incredible. Kind of revises my concepts every time I go there 🙂 I hope they open a new store in your neighborhood.

Incredibly useful and logical explanation!

At last! A logical mind and a clear writer. This explanation (like so many others on the site) is incredibly useful.