Previous Posts

SPSS has the Count Values within Cases option, but R does not have an equivalent function. Here are two functions that you might find helpful, each of which counts values within cases inside a rectangular array...
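As a rough illustration of the idea (this is a minimal sketch, not either of the functions from the post itself), counting a value within each case can be done by comparing the whole array to the value and summing across rows. The helper name `count_in_cases` and the `survey` data are hypothetical.

```r
# Hypothetical helper, NOT the function from the original post:
# counts how many times `value` appears in each row (case) of `data`.
count_in_cases <- function(data, value) {
  # data == value yields a logical matrix; rowSums() counts the TRUEs per row.
  # na.rm = TRUE means missing cells are simply not counted.
  rowSums(data == value, na.rm = TRUE)
}

# Made-up survey responses: three cases, three items
survey <- data.frame(
  q1 = c(1, 2, 1),
  q2 = c(1, NA, 3),
  q3 = c(2, 1, 1)
)

# Number of items answered "1" by each respondent
ones_per_case <- count_in_cases(survey, 1)
ones_per_case
```

Treating missing cells as "not a match" is a design choice in this sketch; you may prefer to return `NA` for cases with missing items.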

Combining the length() and which() commands gives a handy method of counting elements that meet particular criteria...
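Here is a small sketch of that combination, using a made-up vector (`scores` is a hypothetical name, not data from the post). `which()` returns the positions where a condition holds, and `length()` counts them.

```r
# Hypothetical example vector, including one missing value
scores <- c(12, 7, NA, 15, 3, 9, 15)

# which() returns the indices where the condition is TRUE (NAs are dropped),
# and length() counts those indices
n_above_8 <- length(which(scores > 8))
n_above_8

# A common alternative: sum the logical vector directly, skipping NAs
n_above_8_alt <- sum(scores > 8, na.rm = TRUE)
n_above_8_alt
```

Both approaches give the same count here; the `which()` version has the small convenience of ignoring missing values without any extra argument.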

Why does ANOVA give main effects in the presence of interactions, but Regression gives marginal effects? What are the advantages and disadvantages of dummy coding and effect coding? When does it make sense to use one or the other? How does each one work, really?
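To make the two coding schemes concrete, here is a minimal sketch in R, assuming a one-way model on the built-in mtcars data (the choice of `mpg` and `cyl` is ours, purely for illustration). In R, dummy coding corresponds to `contr.treatment` and effect coding to `contr.sum`.

```r
# Contrast matrices for a 3-level factor
contr.treatment(3)  # dummy coding: each column compares a level to level 1
contr.sum(3)        # effect coding: the last level is coded -1 in every column

# Illustrative data: treat number of cylinders as a categorical predictor
cars <- transform(mtcars, cyl_f = factor(cyl))

# Dummy (treatment) coding is R's default for unordered factors
dummy_fit <- lm(mpg ~ cyl_f, data = cars)

# Effect (sum-to-zero) coding, requested through the contrasts argument
effect_fit <- lm(mpg ~ cyl_f, data = cars,
                 contrasts = list(cyl_f = "contr.sum"))

# Dummy coding: intercept = mean of the reference group (4 cylinders)
coef(dummy_fit)[1]
# Effect coding: intercept = unweighted mean of the three group means
coef(effect_fit)[1]
```

The fitted values are identical under both schemes; only the meaning of the coefficients changes.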

Why We Needed a Random Sample of 6 numbers between 1 and 10000

As you may have read in one of our recent newsletters, this month The Analysis Factor hit two milestones: 10,000 subscribers to our mailing list and 6 years in business. We’re quite happy about both, and seriously grateful to all members of our […]

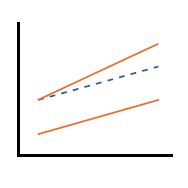

Not too long ago, a client asked for help with using Spotlight Analysis to interpret an interaction in a regression model. Spotlight Analysis? I had never heard of it. As it turns out, it’s a (snazzy) new name for an old way of interpreting an interaction between a continuous and a categorical grouping variable in a regression model...

A central concept in statistics is level of measurement of variables. It's so important to everything you do with data that it's usually taught within the first week in every intro stats class. But even something so fundamental can be tricky once you start working with real data...

We continue with the same glm on the mtcars data set (regressing the vs variable on the weight and engine displacement). Now we want to plot our model, along with the observed data. Although we ran a model with multiple predictors, it can help interpretation to plot the predicted probability that vs=1 against each predictor separately. So first we fit
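A minimal sketch of one such plot, for weight only. This is an assumption about how it might be done, not necessarily the post's own code: we fit a single-predictor logistic model, predict over a grid of weights, and overlay the curve on the observed 0/1 values. The names `fit_wt`, `wt_grid`, and `p_hat` are ours.

```r
# Single-predictor logistic regression of vs on weight (mtcars ships with R)
fit_wt <- glm(vs ~ wt, data = mtcars, family = binomial)

# A grid of weights spanning the observed range
wt_grid <- seq(min(mtcars$wt), max(mtcars$wt), length.out = 100)

# type = "response" returns predicted probabilities rather than log-odds
p_hat <- predict(fit_wt, newdata = data.frame(wt = wt_grid), type = "response")

# Observed data as points, fitted probability curve as a line
plot(mtcars$wt, mtcars$vs, pch = 16,
     xlab = "Weight (1000 lbs)", ylab = "vs (observed) / P(vs = 1)")
lines(wt_grid, p_hat)
```

The same steps can be repeated with `disp` in place of `wt` to get the plot for engine displacement.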

While parametric regression models like linear and logistic regression are still the mainstay of statistical modeling, they are not the only, nor always the best, approach to predicting outcome variables. Classification and Regression Trees (CART) are a nonparametric approach to using values of predictors to find good predictions of values of a response variable.

In the last article, we saw how to create a simple Generalized Linear Model on binary data using the glm() command. We continue with the same glm on the mtcars data set

Ordinary Least Squares regression provides linear models of continuous variables. However, much of the data of interest to statisticians and researchers is not continuous, so other methods must be used to create useful predictive models. The glm() command is designed to fit generalized linear models (regressions) to binary outcome data, count data, probability data, proportion data and many other data types. In this blog post, we explore the use of R’s glm() command on one such data type. Let’s take a look at a simple example where we model binary data.
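For a flavor of what this looks like, here is a minimal sketch using the model described in the follow-up posts (the binary `vs` variable in mtcars regressed on weight and engine displacement). The exact call in the original article may differ; the object name `model` is ours.

```r
# vs is a 0/1 variable, so we ask glm() for the binomial family,
# which uses the logit link by default (i.e. logistic regression)
model <- glm(vs ~ wt + disp, data = mtcars, family = binomial)

# Coefficients are on the log-odds scale
summary(model)

# Predicted probabilities that vs = 1 for the observed cars
head(fitted(model))
```

Changing the `family` argument (for example to `poisson` for count data) is how the same command handles the other data types mentioned above.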
