Previous Posts

Multinomial Logistic Regression

The multinomial (a.k.a. polytomous) logistic regression model is a simple extension of the binomial logistic regression model. It is used when the dependent variable has more than two nominal (unordered) categories. Dummy coding of independent variables is quite common. In multinomial logistic regression the dependent variable is dummy coded into multiple 1/0 […]
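
To make the idea of dummy coding the outcome concrete, here is a minimal Python sketch. It assumes one category is held out as the reference, a common convention; the excerpt is cut off before saying how the reference is chosen. The function `dummy_code` and the example categories are hypothetical, for illustration only.

```python
def dummy_code(outcomes, reference):
    """Turn a nominal outcome into 1/0 indicator columns.

    One column per category except the reference category (an assumed
    convention for this sketch), so K categories become K - 1 columns.
    """
    categories = sorted(set(outcomes) - {reference})
    return {cat: [1 if y == cat else 0 for y in outcomes] for cat in categories}


# Hypothetical example: commuting mode chosen by five respondents.
choices = ["bus", "car", "train", "car", "bus"]
coded = dummy_code(choices, reference="car")

for category, column in coded.items():
    print(category, column)
```

With "car" as the reference, the three categories become two indicators: `bus [1, 0, 0, 0, 1]` and `train [0, 0, 1, 0, 0]`. A respondent who chose the reference category is 0 on both.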

I first encountered the Great Likert Data Debate in 1992 in my first statistics class in my psychology graduate program. My stats professor was a brilliant mathematical psychologist and taught the class unlike any psychology grad class I’ve ever seen since. Rather than learn ANOVA in SPSS, we derived the Method of Moments using Matlab. […]

SPSS Variable Labels and Value Labels are two great features that let you create a code book right in the data set. Using them every time is good data analysis practice. SPSS no longer limits variable names to 8 characters like it used to, but you still can’t use spaces, and it will […]

There are many dependent variables that, no matter how many transformations you try, you cannot get to be normally distributed. The most common culprits are count variables: variables that measure the count or rate of some event in a sample. Some examples I’ve seen from a variety of disciplines are: Number of eggs in a […]

The beauty of the Univariate GLM procedure in SPSS is that it is so flexible. You can use it to analyze regressions, ANOVAs, ANCOVAs with all sorts of interactions, dummy coding, etc. The downside of this flexibility is that it is often confusing what to put where and what it all means. So here’s a […]

I once had a client from engineering. This is pretty rare, as I usually work with social scientists and biologists. And despite the fact that I was an engineering major for my first two semesters in college, I generally don’t understand a thing engineers talk about. But I digress. In this consultation, we had gotten […]

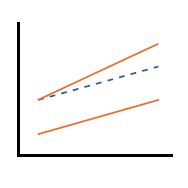

One of the most common causes of multicollinearity is when predictor variables are multiplied to create an interaction term or quadratic or higher-order terms (X squared, X cubed, etc.). Why does this happen? When all the X values are positive, higher values produce high products and lower values produce low products. So the […]
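
A small Python sketch makes the point visible. It assumes a made-up predictor X taking the values 1 through 10 (all positive), and compares the correlation of X with X squared before and after centering X at its mean. The helper `pearson` is written out by hand here so the example needs nothing beyond the standard library.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical predictor: all values positive.
x = list(range(1, 11))
x_sq = [v * v for v in x]

# Center X at its mean (5.5) before squaring.
mean_x = sum(x) / len(x)
x_centered = [v - mean_x for v in x]
x_centered_sq = [v * v for v in x_centered]

r_raw = pearson(x, x_sq)                        # high: big X goes with big X squared
r_centered = pearson(x_centered, x_centered_sq)  # near zero for symmetric centered values

print(f"raw: {r_raw:.3f}, centered: {r_centered:.3f}")
```

With the raw values the correlation is close to 1, because the largest X values also produce the largest squares. After centering, negative and positive deviations produce the same squares, so for these symmetric values the correlation drops to zero.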

I was recently asked about whether centering (subtracting the mean) a predictor variable in a regression model has the same effect as standardizing (converting it to a Z score). My response: They are similar but not the same. In centering, you are changing the values but not the scale. So a predictor that is centered […]
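
Here is a minimal Python sketch of the difference, using a made-up set of predictor values. Centering shifts the mean to 0 but leaves the standard deviation in the original units; standardizing also divides by the standard deviation, so the result has a standard deviation of 1.

```python
import statistics

# Hypothetical predictor values (mean 5).
x = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mean_x = statistics.mean(x)
sd_x = statistics.stdev(x)  # sample standard deviation

# Centering: subtract the mean; the scale (spread) is unchanged.
centered = [v - mean_x for v in x]

# Standardizing: subtract the mean and divide by the SD (Z scores).
standardized = [(v - mean_x) / sd_x for v in x]

print("original:    ", mean_x, sd_x)
print("centered:    ", statistics.mean(centered), statistics.stdev(centered))
print("standardized:", statistics.mean(standardized), statistics.stdev(standardized))
```

Both versions have a mean of 0, but only the centered one keeps the original spread. That is why a regression coefficient for a centered predictor is still "per one original unit," while the coefficient for a standardized predictor is "per one standard deviation."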

Circular variables, which indicate direction or cyclical time, can be of great interest to biologists, geographers, and social scientists. The defining characteristic of circular variables is that the beginning and end of their scales meet. For example, compass direction is often defined with true North at 0 degrees, but it is also at 360 degrees, […]
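
A quick Python sketch shows why the wraparound matters. It assumes compass bearings in degrees and uses one standard approach to a circular mean: average the sines and cosines of the angles and convert back with `atan2`. The function name `circular_mean_degrees` is hypothetical.

```python
import math


def circular_mean_degrees(angles):
    """Mean direction of angles in degrees.

    Treats each angle as a unit vector, sums the vectors, and returns
    the direction of the sum, normalized to [0, 360).
    """
    s = sum(math.sin(math.radians(a)) for a in angles)
    c = sum(math.cos(math.radians(a)) for a in angles)
    return math.degrees(math.atan2(s, c)) % 360


# Hypothetical bearings: 10 degrees west of North and 30 degrees east of North.
bearings = [350, 30]

naive = sum(bearings) / len(bearings)     # 190: points roughly South
circular = circular_mean_degrees(bearings)  # about 10: just east of North

print(naive, round(circular, 6))
```

The ordinary arithmetic mean of 350 and 30 is 190, which points almost due South, even though both bearings are near North. Averaging on the circle gives about 10 degrees, which is what you'd expect once 350 is understood as 10 degrees short of 360 (i.e., of 0).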

Statistical models, such as general linear models (linear regression, ANOVA, MANOVA), linear mixed models, and generalized linear models (logistic regression, Poisson regression, etc.), all have the same general form. On the left side of the equation is one or more response variables, Y. On the right-hand side is one or more predictor variables, X, and […]

stat skill-building compass