Mediators can affect the relationship between X and Y by carrying part of X's effect through to Y. Moderators can change the strength, and sometimes the direction, of that relationship. And sometimes mediators and moderators affect each other.

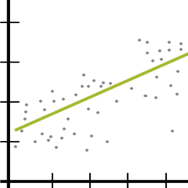
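As a rough illustration, the sketch below simulates made-up data and uses numpy least squares (an assumption; no software is named here) to show one common way to quantify each idea: the product-of-coefficients estimate of a mediated (indirect) effect, and an X*W interaction term for a moderator W. All variable names and true coefficient values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5_000

def ols(y, *predictors):
    """Return OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Mediation (hypothetical truth): X -> M -> Y, plus a direct X -> Y path.
x = rng.normal(size=n)
m = 0.5 * x + rng.normal(size=n)            # path a = 0.5
y = 0.3 * x + 0.8 * m + rng.normal(size=n)  # direct c' = 0.3, path b = 0.8

a = ols(m, x)[1]                 # effect of X on the mediator
c_prime, b = ols(y, x, m)[1:3]   # direct effect of X and effect of M, holding X fixed
total = ols(y, x)[1]             # total effect of X, ignoring M
indirect = a * b                 # product-of-coefficients indirect effect
print(f"total={total:.2f} direct={c_prime:.2f} indirect={indirect:.2f}")

# Moderation (hypothetical truth): the slope of X depends on W.
w = rng.normal(size=n)
y2 = 1.0 * x + 0.5 * w + 0.7 * x * w + rng.normal(size=n)
b0, bx, bw, bxw = ols(y2, x, w, x * w)
for w_val in (-1.0, 0.0, 1.0):
    # Conditional slope of X at a given value of the moderator W.
    print(f"slope of X when W={w_val:+.0f}: {bx + bxw * w_val:.2f}")
```

With ordinary least squares and a single mediator, the total effect splits exactly into the direct effect plus a*b, which is why the indirect effect is often reported as that product.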

Linear regression with a continuous predictor is set up to measure the constant relationship between that predictor and a continuous outcome.

This relationship is measured as the expected change in the outcome for each one-unit change in the predictor.

One big assumption in this kind of model is that this rate of change is the same for every value of the predictor. It's an assumption worth questioning, though, because it doesn't hold for a lot of relationships.

Segmented regression allows you to generate different slopes and/or intercepts for different segments of values of the continuous predictor. This can provide you with a wealth of information that a non-segmented regression cannot.
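Here is a minimal sketch of the idea on simulated data, assuming the breakpoint (`knot`, a hypothetical name) is known in advance and using plain numpy least squares. A hinge term `max(x - knot, 0)` lets the slope change above the knot, and a 0/1 step term lets the intercept jump there; the ordinary one-slope fit is shown for comparison.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.uniform(0, 10, size=2_000)
knot = 5.0  # hypothetical breakpoint, assumed known in advance
# Made-up truth: slope 1.0 below the knot and 3.0 above it, with no jump.
y = 2.0 + 1.0 * x + 2.0 * np.maximum(x - knot, 0.0) + rng.normal(scale=0.5, size=x.size)

def fit(y, *cols):
    """Return OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *cols])
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Ordinary regression: a single slope for every value of x.
_, single_slope = fit(y, x)

# Segmented regression:
#   hinge = max(x - knot, 0) lets the slope change above the knot;
#   step  = 1 when x > knot lets the intercept jump there.
hinge = np.maximum(x - knot, 0.0)
step = (x > knot).astype(float)
b0, slope_below, slope_change, jump = fit(y, x, hinge, step)
slope_above = slope_below + slope_change
print(f"single slope: {single_slope:.2f}")
print(f"below knot: {slope_below:.2f}, above knot: {slope_above:.2f}, jump: {jump:.2f}")
```

The single-slope fit lands between the two true slopes and describes neither segment well, while the segmented fit recovers both. Dropping the `step` column forces the two lines to meet at the knot.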

In this webinar, we will cover (more…)

In a simple linear regression model, how the constant (a.k.a., intercept) is interpreted depends upon the type of predictor (independent) variable.

If the predictor is categorical and dummy-coded, the constant is the mean value of the outcome variable for the reference category only. If the predictor variable is continuous, the constant equals the predicted value of the outcome variable when the predictor variable equals zero.
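A short numpy sketch on made-up data (group labels, means, and ranges are hypothetical) checks both interpretations: with dummy-coded groups and "A" as the reference category, the fitted constant matches the mean of group A; with a continuous predictor, the constant is the predicted outcome at x = 0.

```python
import numpy as np

rng = np.random.default_rng(2)

def fit(y, *cols):
    """Return OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *cols])
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Categorical predictor with three hypothetical groups; "A" is the reference.
groups = np.repeat(["A", "B", "C"], 50)
true_means = {"A": 10.0, "B": 12.0, "C": 15.0}
y = np.array([true_means[g] for g in groups]) + rng.normal(size=groups.size)
dummy_b = (groups == "B").astype(float)   # 1 for group B, else 0
dummy_c = (groups == "C").astype(float)   # 1 for group C, else 0
const_cat, coef_b, coef_c = fit(y, dummy_b, dummy_c)
mean_a = y[groups == "A"].mean()
print(f"constant = {const_cat:.3f}, mean of reference group A = {mean_a:.3f}")

# Continuous predictor: the constant is the predicted y when x equals 0.
# Here x never gets near 0, so the constant is an extrapolation.
x = rng.uniform(20, 60, size=200)
y_cont = 5.0 + 0.4 * x + rng.normal(size=x.size)
const_cont, slope = fit(y_cont, x)
pred_at_zero = const_cont + slope * 0.0
print(f"constant = {const_cont:.3f}, predicted y at x = 0: {pred_at_zero:.3f}")
```

Each dummy coefficient is then the difference between that group's mean and the reference group's mean.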

When your predictor variable X is categorical, the results are logical. Let’s look at an example. (more…)

The concept of a statistical interaction is one of those things that seems very abstract. Obtuse definitions, like this one from Wikipedia, don’t help:

> In statistics, an interaction may arise when considering the relationship among three or more variables, and describes a situation in which the simultaneous influence of two variables on a third is not additive. Most commonly, interactions are considered in the context of regression analyses.

First, we know this is true because we read it on the internet! Second, are you more confused now about interactions than you were before you read that definition? (more…)
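To make "not additive" concrete, here is a minimal sketch on simulated data (all names and coefficient values are hypothetical) using numpy least squares. It compares the predicted change from raising x1 alone, x2 alone, and both together; the gap between "both" and the sum of the separate changes is exactly the interaction coefficient.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 4_000
x1 = rng.normal(size=n)
x2 = rng.integers(0, 2, size=n).astype(float)  # e.g., a 0/1 group indicator
# Made-up truth: x1's slope is 1.0 when x2 = 0 and 1.0 + 1.5 = 2.5 when x2 = 1.
y = 0.5 + 1.0 * x1 + 0.8 * x2 + 1.5 * x1 * x2 + rng.normal(size=n)

# Design matrix with an intercept, both main effects, and their product.
X = np.column_stack([np.ones(n), x1, x2, x1 * x2])
b0, b1, b2, b3 = np.linalg.lstsq(X, y, rcond=None)[0]

def predict(v1, v2):
    """Predicted y from the fitted interaction model."""
    return b0 + b1 * v1 + b2 * v2 + b3 * v1 * v2

# Change in predicted y from raising each variable alone vs. both together.
change_x1 = predict(1, 0) - predict(0, 0)
change_x2 = predict(0, 1) - predict(0, 0)
change_both = predict(1, 1) - predict(0, 0)
print(f"x1 alone: {change_x1:.2f}, x2 alone: {change_x2:.2f}, "
      f"both: {change_both:.2f}, sum of separate: {change_x1 + change_x2:.2f}")
```

Without the `x1 * x2` column, the model would force "both" to equal the sum of the separate changes, which is what additive means here.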

Like the chicken and the egg, there’s a question about which comes first: run a model or check assumptions? Unlike the chicken’s, the model’s question has an easy answer.

A statistical model makes two types of assumptions. Some are distributional assumptions about the errors. Examples include independence, normality, and constant variance in a linear model.

Others are about the form of the model. They include linearity and (more…)
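The distributional assumptions concern the errors, which are never observed directly; they are estimated by the residuals, and residuals exist only once a model has been fitted. The sketch below illustrates that ordering with made-up data whose error spread grows with x, plus a few crude numeric summaries chosen here for illustration (residual plots are the more usual tool).

```python
import numpy as np

rng = np.random.default_rng(4)
n = 1_000
x = rng.uniform(0, 10, size=n)
# Made-up data whose error spread grows with x (non-constant variance).
y = 1.0 + 2.0 * x + rng.normal(scale=0.2 + 0.3 * x, size=n)

# Step 1: fit the model, then compute residuals as estimates of the errors.
X = np.column_stack([np.ones(n), x])
coef = np.linalg.lstsq(X, y, rcond=None)[0]
fitted = X @ coef
resid = y - fitted

# Step 2: check distributional assumptions on the residuals.
low = fitted < np.median(fitted)
sd_ratio = resid[~low].std() / resid[low].std()  # near 1 if variance is constant
skew = np.mean(resid**3) / resid.std()**3        # near 0 for symmetric errors
print(f"mean residual: {resid.mean():.3f}")      # ~0 whenever an intercept is fitted
print(f"residual SD ratio (high/low fitted): {sd_ratio:.2f}")
print(f"residual skewness: {skew:.2f}")
```

Here the SD ratio comes out well above 1, flagging the non-constant variance that was built into the data.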

Why is it we can model non-linear effects in linear regression?

What the heck does it mean for a model to be “linear in the parameters”? (more…)
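A minimal sketch with made-up data shows the idea: a quadratic curve in x is still a weighted sum of its coefficients, so ordinary least squares can fit it by treating x² as just another column of the design matrix. The coefficient values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)
x = rng.uniform(-3, 3, size=500)
# Made-up curved relationship: y = 1 + 0.5x - 0.8x^2 + noise.
y = 1.0 + 0.5 * x - 0.8 * x**2 + rng.normal(scale=0.3, size=x.size)

# The curve is non-linear in x, but the model
#   y = b0 + b1*x + b2*x^2
# is a weighted sum of b0, b1, b2, i.e., linear in the parameters.
# So least squares fits it with x^2 as one more column.
X = np.column_stack([np.ones_like(x), x, x**2])
b0, b1, b2 = np.linalg.lstsq(X, y, rcond=None)[0]
print(f"b0={b0:.2f}, b1={b1:.2f}, b2={b2:.2f}")

# By contrast, y = b0 * exp(b1 * x) is not linear in b1, so it cannot be
# written as X @ coef and cannot be fitted with this design-matrix approach as written.
```

The non-linearity lives in the columns of `X`, not in how the coefficients enter the model, which is what keeps it a linear regression.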