Have you ever compared the list of model assumptions for linear regression across two sources? Whether they’re textbooks, lecture notes, or web pages, chances are the assumptions don’t quite line up.

Why? Sometimes the authors use different terminology, so the lists only look different.

And sometimes they’re including not only model assumptions, but inference assumptions and data issues. All are important, but understanding the role of each can help you understand what applies in your situation.

Model Assumptions

The actual model assumptions are about how the model is specified and how well it estimates the parameters.

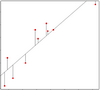

1. The errors are independent of each other

2. The errors are normally distributed

3. The errors have a mean of 0 at all values of X

4. The errors have constant variance

5. All X are fixed and are measured without error

6. The model is linear in the parameters

7. The predictors and response are specified correctly

8. There is a single source of unmeasured random variance

Not all of these are always explicitly stated. And you can’t check them all. How do you know you’ve included all the “correct” predictors?

But don’t skip the step of checking what you can. And for those you can’t, take the time to think about how likely they are in your study. Report that you’re making those assumptions.
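As a rough illustration of "checking what you can," here is a minimal sketch using only Python's standard library. It fits a one-predictor regression to simulated data and summarizes the residuals in two ways: the ratio of residual spread at high versus low X (constant variance) and the Durbin-Watson statistic (independence, which is only meaningful if row order reflects the order of collection). The helper names `fit_simple_ols` and `residual_checks` and the simulated data are assumptions made for this example, not part of any particular package.

```python
import random
import statistics


def fit_simple_ols(x, y):
    """Return (intercept, slope) from least squares with one predictor."""
    x_bar, y_bar = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def residual_checks(x, y):
    """Rough numeric residual summaries (hypothetical helper)."""
    intercept, slope = fit_simple_ols(x, y)
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

    # Constant variance: compare residual spread in the upper and lower
    # halves of X. A ratio far from 1 hints at non-constant variance.
    by_x = [r for _, r in sorted(zip(x, resid))]
    half = len(by_x) // 2
    sd_ratio = statistics.stdev(by_x[half:]) / statistics.stdev(by_x[:half])

    # Independence: Durbin-Watson statistic computed in row order.
    # Values near 2 suggest adjacent residuals are uncorrelated.
    dw = sum((b - a) ** 2 for a, b in zip(resid, resid[1:])) / sum(
        r * r for r in resid
    )
    return {"slope": slope, "sd_ratio": sd_ratio, "durbin_watson": dw}


# Simulated data that satisfies the assumptions (true slope = 0.5).
random.seed(1)
x = [random.uniform(0, 10) for _ in range(200)]
y = [2 + 0.5 * xi + random.gauss(0, 1) for xi in x]

checks = residual_checks(x, y)
print(checks)
```

These numbers are screening aids, not formal tests. In practice you would also look at residual plots, and a statistics package will usually offer more complete diagnostics.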

Assumptions about Inference

Sometimes the assumption is not really about the model, but about the types of conclusions or interpretations you can make about the results.

These assumptions allow the model to be useful in answering specific research questions based on the research design. They’re not about how well the model estimates parameters.

Is this important? Heck, yes. Studies are designed to answer specific research questions. They can only do that if these inferential assumptions hold.

But if they don’t, it doesn’t mean the model estimates are wrong, biased, or inefficient. It simply means you have to be careful about the conclusions you draw from your results. Sometimes this is a huge problem.

But these assumptions don’t apply if they’re for designs you’re not using or inferences you’re not trying to make. This is one situation where reading a statistics book written for a different field of application can be really confusing. Such books focus on the types of designs and inferences that are common in that field.

It’s hard to list out these assumptions because they depend on the types of designs that are possible given ethics and logistics and the types of research questions. But here are a few examples:

1. ANCOVA assumes the covariate and the independent variable (IV) are uncorrelated and do not interact. (Important only in experiments trying to make causal inferences.)

2. The predictors in a regression model are exogenous, that is, uncorrelated with the error term. (Important for conclusions about the relationship between Xs and Y where Xs are observed variables.)

3. The sample is representative of the population of interest. (This one is always important!)
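For the ANCOVA example, one simple thing you can look at is whether the covariate is balanced across the groups of the independent variable. The sketch below computes a standardized mean difference on made-up numbers. The function name `standardized_mean_difference` and the data are hypothetical, and a small difference does not by itself establish that the assumption holds.

```python
import math
import statistics


def standardized_mean_difference(covariate, group):
    """Difference in covariate means between group 1 and group 0,
    divided by the pooled standard deviation (hypothetical helper)."""
    g0 = [c for c, g in zip(covariate, group) if g == 0]
    g1 = [c for c, g in zip(covariate, group) if g == 1]
    pooled_sd = math.sqrt((statistics.variance(g0) + statistics.variance(g1)) / 2)
    return (statistics.fmean(g1) - statistics.fmean(g0)) / pooled_sd


# Made-up data: 0 = control, 1 = treatment.
group = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
covariate = [10, 12, 11, 13, 9, 11, 12, 10, 13, 10]

smd = standardized_mean_difference(covariate, group)
print(round(smd, 3))
```

A value near zero suggests the covariate means are similar across groups. What counts as "near enough" is a judgment call that depends on your field and design.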

Data Issues that are Often Mistaken for Assumptions

And sometimes the list of assumptions includes data issues. Data issues are a little different.

They’re important. They affect how you interpret the results. And they impact how well the model performs.

But they’re still different. When a model assumption fails, you can sometimes solve it by using a different type of model. Data issues generally stay around.

That’s a big difference in practice.

Here are a few examples of common data issues:

1. Small Samples

2. Outliers

3. Multicollinearity

4. Missing Data

5. Truncation and Censoring

6. Excess Zeros

So check for these data issues, deal with them when the solution doesn’t create more problems than it solves, and be careful with the inferences you draw when you can’t.
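A quick screen for several of these issues can be sketched with Python's standard library. The example below counts missing values, computes the share of zeros, flags outliers with the common 1.5 × IQR rule, and uses the correlation between two predictors as a rough multicollinearity signal. The helper names `screen_column` and `pearson_r`, the toy data, and the choice of the IQR rule are all assumptions made for illustration.

```python
import math
import statistics


def screen_column(values):
    """Summarize one numeric column; None marks a missing value."""
    present = [v for v in values if v is not None]
    q1, _, q3 = statistics.quantiles(present, n=4)
    fence = 1.5 * (q3 - q1)
    outliers = [v for v in present if v < q1 - fence or v > q3 + fence]
    return {
        "missing": len(values) - len(present),
        "zero_share": sum(v == 0 for v in present) / len(present),
        "outliers": outliers,
    }


def pearson_r(a, b):
    """Pearson correlation between two equal-length lists."""
    a_bar, b_bar = statistics.fmean(a), statistics.fmean(b)
    sab = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    saa = sum((ai - a_bar) ** 2 for ai in a)
    sbb = sum((bi - b_bar) ** 2 for bi in b)
    return sab / math.sqrt(saa * sbb)


# Toy response with a missing value, many zeros, and one extreme value.
y = [0, 0, 0, 1, 2, 0, 3, None, 0, 40]
summary = screen_column(y)

# Two toy predictors that move almost in lockstep.
x1 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
x2 = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1, 18.0, 20.1]
r = pearson_r(x1, x2)
vif = 1 / (1 - r ** 2)  # variance inflation factor for the two-predictor case

print(summary, round(r, 4), round(vif, 1))
```

Flagging an issue is only the first step. Whether to act on it, and how, still depends on the trade-off described above.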

The series on statistics is great. Complemented by the free webinars, it deserves our deep appreciation for the Team. This is classic education, with a heavy impact in clearing up errors for non-experts like us. We thank you, Karen and Team.

Very informative and useful for practicing Statisticians.

Not sure, but I think we no longer need to rely on model linearity in the parameters. See ‘A Modern Gauss-Markov Theorem’ (Hansen 2021 in Econometrica).

I don’t believe that is the case. The Modern Gauss-Markov Theorem states that under i.i.d. sampling, the covariance matrix of the OLS estimator is a lower bound for the covariance matrix of any unbiased estimator of B, whether that estimator is linear or not.

In fact, it provides more evidence that a linear regression model has nice statistical properties.

Dear Karen,

Thank you for these short but very thoughtful write-ups you come up with from time to time. I find them very useful to help solidify our scanty knowledge in statistics and modelling. I know that these articles are informed by your experiences with learners and what they grapple with most. You are spot on! Please keep them coming.