Previous Posts

Question: Can you use Principal Component Analysis with a Training Set Test Set Model? Answer: Yes and no. Principal Component Analysis specifically could be used with a training and test data set, but it doesn't make as much sense as doing so for Factor Analysis. That's because PCA is really just about creating an index variable from a set of correlated predictors.
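
If you do use PCA with a training/test split, the mechanics matter: the centering, scaling, and weights all come from the training set and are then applied unchanged to the test set. The sketch below assumes NumPy and uses simulated predictors. The helper names `fit_pca` and `score` are hypothetical and not from any particular package.

```python
import numpy as np

def fit_pca(X_train, n_components=1):
    """Fit PCA on standardized training data; return what is needed to score new rows."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0, ddof=1)
    Z = (X_train - mean) / std
    # Correlation matrix of the training predictors
    corr = np.corrcoef(Z, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)   # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]         # largest eigenvalue first
    loadings = eigvecs[:, order[:n_components]]
    return mean, std, loadings

def score(X, mean, std, loadings):
    """Apply the training set's centering, scaling, and weights to any rows."""
    return ((X - mean) / std) @ loadings

rng = np.random.default_rng(0)
base = rng.normal(size=(100, 1))
X = base + 0.3 * rng.normal(size=(100, 3))   # three correlated, simulated predictors
X_train, X_test = X[:70], X[70:]

mean, std, loadings = fit_pca(X_train)
train_index = score(X_train, mean, std, loadings)   # index variable for training rows
test_index = score(X_test, mean, std, loadings)     # same weights reused on test rows
print(train_index.shape, test_index.shape)          # (70, 1) (30, 1)
```

Nothing is re-estimated on the test rows, which is why the split adds less here than it would for a model whose fit you want to validate.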

Question: Can we use PCA for reducing both predictors and response variables? In fact, there were a few related but separate questions about using and interpreting the resulting component scores, so I'll answer them together here.

One of the most confusing issues in statistics is the difference between Principal Component Analysis (PCA) and Factor Analysis (FA). They are similar in many ways, so it’s not hard to see why they’re so often confused: they appear to be two varieties of the same analysis rather than two different methods. Yet there is a fundamental difference between them that has huge effects on how to use them.

Question: In Principal Component Analysis, can loadings be both positive and negative? Answer: Yes. Recall that in PCA, we are creating one index variable (or a few) from a set of variables. You can think of this index variable as a weighted average of the original variables. The loadings are the weights. The goal of the PCA is to come up with optimal weights. "Optimal" means we're capturing as much of the variation in the original variables as possible, based on the correlations among those variables.
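
A small illustration of why mixed signs happen: when one variable is negatively correlated with the others, it gets a weight of the opposite sign on the first component. The correlation matrix below is made up for the example. Here the elements of the eigenvector are used directly as the weights; some software rescales them by the square root of the eigenvalue, which changes their size but not their signs.

```python
import numpy as np

# Hypothetical correlation matrix: x1 and x2 move together, x3 moves against them
corr = np.array([
    [1.0, 0.6, -0.5],
    [0.6, 1.0, -0.4],
    [-0.5, -0.4, 1.0],
])

eigvals, eigvecs = np.linalg.eigh(corr)   # eigenvalues in ascending order
first = eigvecs[:, -1]                    # weights for the first (largest) component
if first[0] < 0:
    first = -first                        # an eigenvector's overall sign is arbitrary; fix it for readability

share = eigvals[-1] / eigvals.sum()       # proportion of total variance captured
print("loadings:", np.round(first, 3))
print("variance captured:", round(share, 3))
```

The weights for x1 and x2 share a sign and the weight for x3 has the opposite sign, so a high index value means high x1 and x2 together with low x3.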

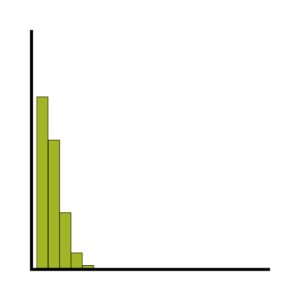

Let’s imagine you have been asked to identify the factors that help a hospital predict a patient’s length of stay in the intensive care unit (ICU). The hospital tells you that once a patient is admitted to the ICU, he or she has a day count of one. As soon as the patient has spent 24 hours plus 1 minute there, the count goes up by one day. Clearly this is count data: there are no fractions, only whole numbers.
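
The day-count rule can be written as a ceiling division that never drops below one. This is a sketch that assumes the stay is recorded in whole minutes; the function name `icu_day_count` is hypothetical.

```python
def icu_day_count(minutes_in_icu: int) -> int:
    """Hypothetical helper: convert minutes in the ICU to the hospital's day count.

    Assumes the rule described above: admission starts day 1, and each time the
    stay passes another full 24 hours (1,440 minutes), one more day is counted.
    """
    if minutes_in_icu < 0:
        raise ValueError("minutes_in_icu must be non-negative")
    full_day = 24 * 60
    # Ceiling division, floored at 1 so that a just-admitted patient has a count of 1
    return max(1, -(-minutes_in_icu // full_day))

for m in [0, 1440, 1441, 2880, 2881]:
    print(m, icu_day_count(m))
```

Exactly 24 hours (1,440 minutes) still counts as day one, and one more minute makes it day two, so the outcome can only ever be a positive whole number.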

One of the biggest challenges that data analysts face is communicating statistical results to our clients, advisors, and colleagues who don’t have a statistics background. Unfortunately, the way that we learn statistics is not usually the best way to communicate our work to others, and many of us are left on our own to navigate what is arguably the most important part of our work.

In a previous article, we discussed how incidence rate ratios calculated in a Poisson regression can be determined from a two-way table of categorical variables. Statistical software can also calculate the expected (or predicted) count for each group. Below are the actual and expected counts of boys and girls participating and not participating in organized sports.
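
The expected counts depend on which model is fit. Assuming a log-linear Poisson model with only the two main effects (no interaction), the fitted count for each cell has a closed form: row total times column total, divided by the grand total. The counts below are hypothetical, not the data from the article.

```python
import numpy as np

# Hypothetical 2x2 table of counts (made up for illustration)
# rows: boys, girls; columns: participating, not participating
observed = np.array([
    [30, 20],
    [20, 40],
])

row_totals = observed.sum(axis=1, keepdims=True)  # shape (2, 1)
col_totals = observed.sum(axis=0, keepdims=True)  # shape (1, 2)
n = observed.sum()

# Fitted (expected) counts from a main-effects-only Poisson log-linear model
expected = row_totals @ col_totals / n
print(np.round(expected, 2))

# Under this model, girls-to-boys ratio of expected counts is the same in both columns
print(expected[1] / expected[0])
```

Because there is no interaction term, the girls-to-boys ratio of expected counts is identical in both columns and equals the ratio of the row totals (here 60/50 = 1.2), which is the incidence rate ratio for gender under this model.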

In count model regression tables, coefficients are shown either in logged form or as incidence rate ratios. Explaining the coefficients in logged form can be difficult; incidence rate ratios are much easier to explain. You may not realize it, but you have probably seen incidence rate ratios before, expressed differently.
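
Converting between the two forms is a single exponentiation: the incidence rate ratio is e raised to the logged coefficient. The coefficient value below is hypothetical. Expressing the ratio as a percent change is one way to make it concrete for a non-statistical audience.

```python
import math

# Hypothetical coefficient (log scale) from a Poisson regression table
log_coef = 0.25
irr = math.exp(log_coef)        # incidence rate ratio
pct_change = (irr - 1) * 100    # percent change in the expected count per one-unit increase
print(f"IRR = {irr:.3f}, about {pct_change:.1f}% higher expected count")
```

An IRR above 1 means a higher expected count for each one-unit increase in the predictor (holding the other predictors constant); an IRR below 1 means a lower one.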

The normal distribution is so ubiquitous in statistics that those of us who use a lot of statistics tend to forget it's not always so common in actual data. And since the normal distribution is continuous, many people describe all numerical variables as continuous. I get it: I'm guilty of using those terms interchangeably, too, but they're not exactly the same. Numerical variables can be either continuous or discrete. The difference? Continuous variables can take any value within a range. Discrete variables can take only distinct, separate values, most often whole numbers such as counts.

If we have count data, we use a Poisson model for our analysis, right? The key criterion for using a Poisson model is that, after accounting for the effect of predictors, the mean must equal the variance. If the mean doesn’t equal the variance, then all we have to do is transform the data or tweak the model, correct? Let’s see how we can do this with some real data.
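
A rough first look at the mean-equals-variance criterion is the Pearson dispersion statistic: the sum of squared Pearson residuals divided by the residual degrees of freedom, where a value well above 1 suggests overdispersion. The sketch below uses made-up counts and an intercept-only model, so the fitted mean is just the sample mean and the statistic reduces to variance divided by mean. With predictors in the model, you would use each observation's fitted mean and divide by n minus the number of estimated parameters.

```python
import numpy as np

# Hypothetical day counts (made up for illustration, not real data)
counts = np.array([1, 1, 2, 1, 3, 2, 1, 7, 1, 2, 9, 1, 4, 1, 2])

mean = counts.mean()
var = counts.var(ddof=1)

# Intercept-only Poisson model: every observation has fitted mean mu = sample mean
mu = mean
pearson_chi2 = np.sum((counts - mu) ** 2 / mu)
dispersion = pearson_chi2 / (len(counts) - 1)   # one estimated parameter (the intercept)
print(f"mean = {mean:.2f}, variance = {var:.2f}, dispersion = {dispersion:.2f}")
```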

stat skill-building compass