Centering predictor variables is one of those simple but extremely useful practices that are easily overlooked.

It’s almost too simple.

Centering simply means subtracting a constant from every value of a variable. What it does is redefine the 0 point for that predictor to be whatever value you subtracted. It shifts the scale over, but retains the units.
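Here is a minimal sketch of that idea in Python. The ages and the helper name `center` are hypothetical and only for illustration. Subtracting 12 moves the zero point to 12 months, while the spread of the values and their units stay the same.

```python
from statistics import mean, stdev

def center(values, at):
    """Subtract the constant `at` from every value: the zero point moves, the units don't."""
    return [v - at for v in values]

# Hypothetical ages (months) at which five children spoke their first word.
ages = [10.0, 12.0, 14.0, 15.0, 19.0]
centered = center(ages, at=12.0)

print(centered)                         # [-2.0, 0.0, 2.0, 3.0, 7.0]
print(mean(ages), mean(centered))       # 14.0 2.0: the mean shifts by exactly 12
print(stdev(ages) == stdev(centered))   # True: the spread (and the units) are unchanged
```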

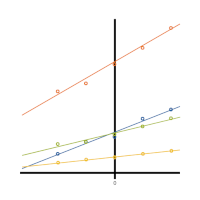

The effect is that the slope between that predictor and the response variable doesn’t change at all. But the interpretation of the intercept does.

The intercept is just the mean of the response when all predictors = 0. So when 0 is outside the range of the data, that value is meaningless. But when you center X so that a value within the dataset becomes 0, the intercept becomes the mean of Y at the value you centered on.

What’s the point? Who cares about interpreting the intercept?

It’s true. In many models, you’re not really interested in the intercept. In those models, there isn’t really a point, so don’t worry about it.

But, and there’s always a but, in many models interpreting the intercept becomes really, really important. So whether and where you center becomes important too.

A few examples include models with a dummy-coded predictor, models with a polynomial (curvature) term, and random slope models.

Let’s look more closely at one of these examples.

In models with a dummy-coded predictor, the intercept is the mean of Y for the reference category: the category coded 0. If there’s also a continuous predictor in the model, X2, that intercept is the mean of Y for the reference category only when X2=0.

If 0 is a meaningful value for X2 and is within the data set, then there’s no reason to center. But if either of those isn’t true, centering will help you interpret the intercept.

For example, let’s say you’re doing a study on language development in infants. X1, the dummy-coded categorical predictor, is whether the child is bilingual (X1=1) or monolingual (X1=0). X2 is the age in months when the child spoke their first word, and Y is the number of words in their vocabulary for their primary language at 24 months.

If we don’t center X2, the intercept in this model will be the mean number of words in the vocabulary of monolingual children who uttered their first word at birth (X2=0).

And since infants never speak at birth, it’s meaningless.

A better approach is to center age at some value that is actually in the range of the data. One option, often a good one, is to use the mean age of first spoken word of all children in the data set.

This would make the intercept the mean number of words in the vocabulary of monolingual children who uttered their first word at the mean age of first word for all children in the sample.

One problem is that the mean age at which infants utter their first word may differ from one sample to another. This means you’re not always evaluating that mean at the exact same age, so the intercept isn’t comparable across samples.

So another option is to choose a meaningful value of age that is within the values in the data set. One example might be 12 months.

Under this option, the intercept is the mean number of words in the vocabulary of monolingual children who uttered their first word at 12 months.

The exact value you center on doesn’t matter as long as it’s meaningful, holds the same meaning across samples, and is within the range of the data. You may find that choosing the lowest value or the highest value of age is the best option. It’s up to you to decide the age at which it’s most meaningful to interpret the intercept.
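To see the three choices side by side, here is a sketch on simulated data loosely shaped like the language example. Everything about the data is invented (sample size, age range, effect sizes, noise), and `ols` and `fit` are hypothetical helpers written here so the example runs with only the standard library. The bilingual and age coefficients come out identical under every centering choice; only the intercept moves, and it always equals the predicted vocabulary of monolingual children (X1 = 0) at the centering age.

```python
import random

def ols(rows, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved with Gauss-Jordan elimination and partial pivoting."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    a = [row + [v] for row, v in zip(xtx, xty)]          # augmented matrix
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(k):
            if r != col:
                f = a[r][col] / a[col][col]
                a[r] = [ar - f * ac for ar, ac in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]

# Simulated data; the sample size, age range and effect sizes are all invented.
random.seed(1)
n = 200
bilingual = [random.randint(0, 1) for _ in range(n)]      # X1: 1 = bilingual, 0 = monolingual
first_word = [random.uniform(8, 16) for _ in range(n)]    # X2: age (months) at first word
vocab = [300 - 40 * b - 12 * age + random.gauss(0, 20)    # Y: words known at 24 months
         for b, age in zip(bilingual, first_word)]

def fit(center_at):
    """Fit Y = b0 + b1*X1 + b2*(X2 - center_at); returns [b0, b1, b2]."""
    rows = [[1.0, float(b), age - center_at] for b, age in zip(bilingual, first_word)]
    return ols(rows, vocab)

mean_age = sum(first_word) / n
for label, c in [("uncentered", 0.0), ("mean-centered", mean_age), ("centered at 12", 12.0)]:
    b0, b1, b2 = fit(c)
    print(f"{label:>15}: intercept={b0:7.1f}  bilingual={b1:6.1f}  age={b2:6.2f}")
```

The uncentered intercept describes monolingual children speaking at birth, the mean-centered one shifts whenever the sample mean shifts, and the 12-month version keeps the same meaning from sample to sample.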

I understood the explanation, but I’m struggling with understanding what the intercept will be when I have multiple numeric variables and multiple dummy variables.

I’ve seen examples where the intercept is added to the beta coefficient of a dummy variable. My question is how do I calculate the intercept when I have multiple numeric and multiple dummy variables, if I want to know the impact of a reference category.

Hi Peter,

When you have multiple predictors, both numeric and dummy, the intercept is the mean of Y when all Xs equal 0. This may or may not be meaningful.
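To make that concrete, here is a sketch with made-up coefficients for a model with two numeric predictors and a three-level factor coded as two dummies, with group A as the reference. The names `coef` and `predict` are hypothetical. Setting everything to 0 returns the intercept; switching a dummy on adds its coefficient; the mean for the reference group at other values of the numeric predictors is just the intercept plus those terms.

```python
# Hypothetical fitted coefficients (invented for illustration): two numeric
# predictors x1, x2 and a three-level factor coded by the dummies group_b and
# group_c, with group A as the reference category.
coef = {"intercept": 50.0, "x1": 2.0, "x2": -0.5, "group_b": 8.0, "group_c": -3.0}

def predict(x1, x2, group):
    """Predicted mean of Y for the given predictor values."""
    y = coef["intercept"] + coef["x1"] * x1 + coef["x2"] * x2
    if group == "B":
        y += coef["group_b"]
    elif group == "C":
        y += coef["group_c"]
    return y

print(predict(0, 0, "A"))   # 50.0: the intercept = reference group A with x1 = x2 = 0
print(predict(0, 0, "B"))   # 58.0: intercept + group_b coefficient
print(predict(10, 4, "A"))  # 68.0: reference group at x1 = 10, x2 = 4
```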

Here are a few free resources we have that you might find helpful:

How to Interpret the Intercept in 6 Linear Regression Examples

Free Craft of Statistical Analysis webinar recording: Interpreting Linear Regression Coefficients

I want to center a variable in a dataset, but for my analyses I need to apply sampling weights. Should I center the variable with the sampling weights applied? Or should I center the data using unweighted data?

Ooh, that’s a good question. Ultimately, you’ll need the mean to equal 0 in the analysis with the sampling weights applied, which means centering on the weighted mean.
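A minimal sketch of what that looks like, assuming the weights are simple frequency-style sampling weights (the values are made up, and `weighted_mean` is a hypothetical helper). Centering on the weighted mean gives a weighted mean of 0, while the unweighted mean of the same centered values is not 0.

```python
def weighted_mean(values, weights):
    """Mean of `values` with each value counted in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

x = [3.0, 5.0, 8.0, 10.0]
w = [1.0, 4.0, 2.0, 1.0]          # hypothetical sampling weights

m_w = weighted_mean(x, w)         # 6.125
x_centered = [v - m_w for v in x]

print(weighted_mean(x_centered, w))        # 0.0: mean is 0 once the weights are applied
print(sum(x_centered) / len(x_centered))   # 0.375: the unweighted mean is not 0
```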

Hi there,

So after centering the variables, do we then report the variables with the original variable name or use the new centred variable name? An undergrad struggling. Many thanks

You can do either. The effect is the same. Whichever way communicates the results most clearly to your audience is the best way.

Thanks for this helpful page. I understand that I am supposed to mean center my variables first and then multiply them together to create my interaction term. But is it a problem that when I multiply two negative scores, I will have a positive score? If it is not a problem, can you please help me to understand why?

I have no idea when this comment was left, but it’s not a problem. A centered score is negative when it’s below its mean, so two negative scores multiply to a positive product because both are on the same side of their means. A positive product just means the two scores deviate in the same direction.

Lower weight -> lower height is really the same thing as higher weight -> higher height
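Here is a small sketch of the sign pattern, using made-up weights and heights. After centering, the product is positive whenever both scores are on the same side of their means (both below or both above) and negative only when they sit on opposite sides.

```python
from statistics import mean

# Hypothetical weights (kg) and heights (cm) for five people.
weight = [55.0, 62.0, 68.0, 80.0, 85.0]
height = [158.0, 165.0, 172.0, 176.0, 184.0]

w_bar, h_bar = mean(weight), mean(height)  # 70.0 and 171.0
w_c = [v - w_bar for v in weight]
h_c = [v - h_bar for v in height]
product = [a * b for a, b in zip(w_c, h_c)]  # the interaction term

for a, b, p in zip(w_c, h_c, product):
    print(f"{a:+5.0f} x {b:+4.0f} = {p:+5.0f}")
# The first row is -15 x -13 = +195: both below average, so the product is positive.
```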

Thank you for this beautiful explanation. I was struggling to understand how a centered and uncentered quadratic model differ and why the linear interaction terms become insignificant. Now I am quite clear. Thanks again.

Should you also centre variables (when appropriate) if using a mixed model as opposed to a regression analysis?

I might not be grasping this correctly. If you “centered” your data so that the mean value of Y would be, for example, at the mean of X (or the mean of all the X’s), then wouldn’t the intercept still be on the left-hand side of the graph? You’d still get an intercept when you run the model, right? It would not be where X=0 in this case, but might, for example, be where X = -15. How would you interpret this intercept, and could it be statistically significant? Or is there any way to move the Y-axis to the center of the graph so that (in this case) the mean of Y would be where the mean of X is (i.e., the sloped line intersects the crosshairs where both X and Y are at their mean points)?

“The intercept is just the mean of the response when all predictors = 0.” Shouldn’t that be: “The intercept is the value of the response when all predictors = 0.” The intercept isn’t a mean simply because x = 0.

Am I wrong?

Thanks,

Dan.

Hi Dan,

All predicted values in a regression line are conditional means: the mean of Y at a certain value of X.

If all Xs=0, the only coefficient that doesn’t drop out is the intercept. So the intercept is the mean of Y conditional on all Xs =0. It’s not the value of Y as there will (at least theoretically) be many values of Y that aren’t exactly equal to the intercept. But if the assumptions of the model are met, and all X’s can = 0, the intercept should be their mean.

I have output from a polynomial regression and only the second order term seems centered on its mean. I cannot figure out why only the x^2 term is centered and not x. Do you have an easy explanation?

thank you,

Jeb

Hi Karen,

Perhaps “gloss” was a bit severe. But it seemed to me that you’ve buried the lede. When I teach regression I generally use the centered-regressor form, because that form takes away much of the desire for the intercept to mean something. (Pun intended. Sorry.)

I’m not quite sure what you mean by, “the regression line will go through the data centroid by definition.” This is certainly true for any model that fits a mean (intercept) term. It is true of models that do not fit a mean term only when the data centroid is the origin. That is, when we center all the variables involved in the model.

Dennis

hi Dennis,

Thanks for your comments. Yes, you’re exactly right. I hope I wasn’t glossing over that, because indeed the whole point is that the intercept parameter becomes a meaningful value, with a meaningful p-value, when zero values for all Xs are real values in the data set.

The regression line will go through the data centroid by definition, whether we center X or not. But we won’t have a parameter estimate or p-value for it.

Karen

Nicely explained. Centering has another salutary effect you’ve alluded to but seem to have glossed over.

In the conventional slope-intercept form of a linear regression model, the so-called ‘intercept’ parameter is calculated as $\hat{b}_0 = \bar{y} - \hat{b}_1 \bar{x}$. This gives the appearance of being a meaningful parameter, but generally is not. The exception occurs when we’ve observed values of X near 0. If we attempt to interpret $b_0$ as a meaningful parameter, we are making the assumption that linearity holds down to X = 0.

Now, if we have some theory that says this ought to be the case, perhaps it’s okay to do so. But I’ll submit that it’s better to collect data near X = 0 as a check on theory. And in the case where we are doing empirical modeling there is no justification for the assumption.

If we center the regressor before fitting, the prediction model is $\hat{y}_i = \bar{y} + \hat{b}_1 (x_i - \bar{x})$. It is now clear what effect the so-called intercept has: it forces the regression line through the data centroid $(\bar{x}, \bar{y})$. We can’t misinterpret the intercept in this formulation of the model.