Previous Posts

Have you ever wondered whether you should report separate means for different groups or a pooled mean from the entire sample? This question comes up often, for instance when deciding whether to separate by sex, region, or observed treatment.

Item Response Theory (IRT) refers to a family of statistical models for evaluating the design and scoring of psychometric tests, assessments and surveys. It is used for assessments in psychology, psychometrics, education, health studies, marketing, economics and the social sciences, specifically assessments that involve categorical items (e.g., Likert items).

How do you know when to use a time series model and when to use a linear mixed model for longitudinal data? What’s the difference between repeated measures data and longitudinal data?

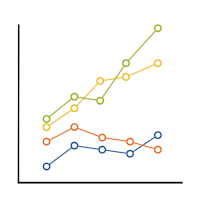

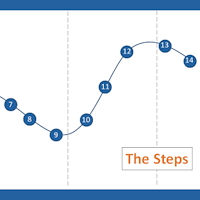

No matter what statistical model you’re running, you need to take the same steps. The order and the specifics of how you do each step will differ depending on the data and the type of model you use. These steps fall into four phases. Most people think of only the third as modeling. But the […]

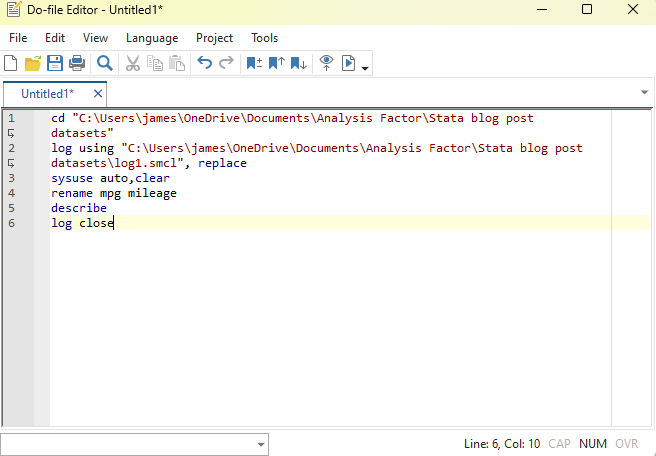

If you’ve tried coding in Stata, you may have found it strange. The syntax rules are straightforward, but different from what I expected. I had experience coding in Java and R before I ever used Stata. Because of this, I expected commands to be followed by parentheses, and for this to make it easy to […]

When analyzing longitudinal data, do you use regression-based or structural-equation-based approaches? There are many types of longitudinal data and different approaches to analyzing them. Two popular approaches are a regression-based approach and a structural equation modeling-based approach.

Ever consider skipping the important step of cleaning your data? It’s tempting but not a good idea. Why? It’s a bit like baking. I like to bake. There’s nothing nicer than a rainy Sunday with no plans, and a pantry full of supplies. I have done my shopping, and now it’s time to make the […]

Structural Equation Modeling (SEM) is a popular method to test hypothesized relationships between constructs in the social sciences. These constructs may be unobserved (a.k.a. “latent”) or observed (a.k.a. “manifest”). In this webinar, guest instructor Manolo Romero Escobar will describe the different types of SEM: confirmatory factor analysis, path analysis for manifest and latent variables, and latent growth modeling (i.e., the application of SEM to longitudinal data).

There are many designs that could be considered repeated measures designs, and they all have one key feature: you measure the outcome variable for each subject on several occasions, treatments, or locations. Understanding this design is important for avoiding analysis mistakes. For example, you can’t treat multiple observations on the same subject as independent observations. […]

From our first Getting Started with Stata posts, you should be comfortable navigating the windows and menus of Stata. We can now get into programming in Stata with a do-file. Why Do-Files? A do-file is a Stata file that provides a list of commands to run. You can run an entire do-file at once, or […]
