One activity in data analysis that can seem impossible is the quest to find the right analysis. I applaud the conscientiousness and integrity that underlie this quest.

The problem: in many data situations there isn’t one right analysis.

Textbook examples show ideal situations and the right analyses for them. They’re like cookbooks: first choose the recipe, then shop for the specific ingredients it calls for and follow steps 1, 2, and 3 to get a specific meal.

Real Data Analysis

Real data analysis rarely fits that ideal.

Analyzing real data is more like getting a call from your in-laws, who are (surprise!) on their way over for dinner. You look in your refrigerator and find a turnip, Swiss cheese, lentils, and pickles. Your challenge: make the best meal possible.

This is part of what makes data analysis so tough. There are so many contextual factors.

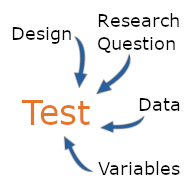

Your research question, design, types of variables, and data issues all have to come together to determine the best analysis available to you.

Of course, if you’re at all involved with the planning and execution of the data collection (or the grocery shopping), you have a lot more control over what you have to work with. You can take the analysis into account when you plan the study instead of having to cobble it together later.

But that doesn’t mean you can always make everything cookbook-ideal. Sometimes you have to analyze secondary data, and they are what they are. Sometimes ethical, resource, or other constraints limit how you can collect data or what kind of data you can collect.

And often you do everything right and things don’t go as you expected.

It’s not wrong to run an imperfect analysis as long as you’re transparent about its weaknesses. An analysis being imperfect doesn’t mean a better one is out there.

Your job is to do the best analysis you can based on what you have to work with.

An Example

Here’s an example that came up recently with one of our members. The dependent variable was in the form of a rate: number of sales per employee. It was highly skewed. The unit of analysis was the sales office.

It may not look like it, but a rate like this is a count per unit of exposure: the number of sales divided by the number of employees.

The ideal way to analyze these is with a count model, usually a Poisson or a negative binomial regression. Situations like this fit their assumptions: count models assume a skewed distribution of Y|X and higher variance at higher means. Further, they can include the number of employees as an exposure variable, and they will only give positive predicted values.

In other words, a well-constructed count model can answer the research question without bias or violations of assumptions.
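To make the exposure idea concrete, here is a minimal sketch of a Poisson regression with the number of employees as the exposure, fit by Newton-Raphson in plain Python. It assumes a single hypothetical office-level predictor `x`; the function name `poisson_fit_with_exposure` and the toy data are invented for illustration. In practice you would use a statistical package, for example statsmodels' `GLM` with a Poisson family and its `exposure` argument.

```python
import math

def poisson_fit_with_exposure(x, counts, exposure, iters=50, tol=1e-10):
    """Fit log E[count] = log(exposure) + b0 + b1 * x by Newton-Raphson.

    x, counts, exposure: equal-length sequences; every exposure must be > 0
    and the counts must not all be zero. Returns (b0, b1).
    """
    # log(exposure) is an offset: its coefficient is fixed at 1, not estimated.
    offset = [math.log(e) for e in exposure]
    # Start at the overall log rate (total counts / total exposure), slope 0.
    b0 = math.log(sum(counts) / sum(exposure))
    b1 = 0.0
    for _ in range(iters):
        mu = [math.exp(o + b0 + b1 * xi) for o, xi in zip(offset, x)]
        # Score: gradient of the Poisson log-likelihood.
        g0 = sum(y - m for y, m in zip(counts, mu))
        g1 = sum((y - m) * xi for y, m, xi in zip(counts, mu, x))
        # Fisher information (negative Hessian) for the two coefficients.
        h00 = sum(mu)
        h01 = sum(m * xi for m, xi in zip(mu, x))
        h11 = sum(m * xi * xi for m, xi in zip(mu, x))
        det = h00 * h11 - h01 * h01
        # Newton step: information matrix inverse times the score.
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (-h01 * g0 + h00 * g1) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1

# Hypothetical data: predictor, sales counts, and employees for five offices.
x = [0, 1, 2, 3, 4]
sales = [3, 9, 4, 11, 20]
employees = [5, 10, 3, 6, 8]
b0, b1 = poisson_fit_with_exposure(x, sales, employees)
# Predicted sales per employee at x = 2; exp() keeps it positive.
print(f"b0 = {b0:.3f}, b1 = {b1:.3f}, rate at x=2: {math.exp(b0 + b1 * 2):.3f}")
```

Because the prediction is exp() of a linear predictor, the fitted rate can never be negative, and exp(b1) reads as the multiplicative change in sales per employee for a one-unit increase in `x`.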

The problem is that count models require the dependent variable to be a count (number of sales). The exposure variable (number of employees) has to be kept as a separate variable, and the model combines the two into a rate.
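Written out for a Poisson model with one hypothetical predictor $x_i$ for office $i$, the exposure enters as an offset, a term whose coefficient is fixed at 1. Moving it to the left-hand side shows how the model turns the count back into a rate:

```latex
\log E[\text{sales}_i] = \log(\text{employees}_i) + \beta_0 + \beta_1 x_i
\quad\Longleftrightarrow\quad
\log\!\left(\frac{E[\text{sales}_i]}{\text{employees}_i}\right) = \beta_0 + \beta_1 x_i
```

Both sides need sales and employees as separate numbers; the ratio alone is not enough.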

But whoever collected these data had already combined them into a rate. They didn’t keep the original variables, so the ideal analysis was just out of reach.
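A small illustration of what is lost once only the rate is kept: offices of very different sizes can report the same rate, yet the rates are not equally precise. The sketch assumes sales follow a Poisson distribution, under which the variance of sales/employees is rate/employees; the office figures and the helper name `rate_and_se` are hypothetical.

```python
import math

def rate_and_se(sales, employees):
    """Return the observed rate and its approximate Poisson standard error."""
    rate = sales / employees
    # If sales ~ Poisson(rate * employees), Var(sales / employees) = rate / employees.
    se = math.sqrt(rate / employees)
    return rate, se

# Hypothetical offices as (sales, employees); the figures are invented.
offices = [(4, 2), (40, 20), (400, 200)]
for sales, employees in offices:
    rate, se = rate_and_se(sales, employees)
    print(f"{employees:>3} employees: rate = {rate:.2f}, approx. SE = {se:.2f}")
```

All three rates are 2.0, but the two-person office's rate is far noisier. With only the rate column, neither the count model nor a reader can tell these offices apart.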

So instead, I suggested log-transforming the rate and fitting a linear model. It’s not ideal, and had anyone asked before data collection, I would have advised keeping the original variables. But this approach should mitigate the worst problems with skew and non-constant variance, and it can give a reasonable answer to the research question.
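Here is a minimal sketch of that fallback: ordinary least squares of log(rate) on a single predictor, written in plain Python. The function name `fit_log_linear`, the predictor `x`, and the data are hypothetical. It assumes every rate is strictly positive; an office with zero sales cannot be logged, and the common workaround of adding a small constant changes the results, which is one more thing to report.

```python
import math

def fit_log_linear(x, rate):
    """OLS of log(rate) on one predictor. Returns (intercept, slope) on the log scale."""
    if any(r <= 0 for r in rate):
        raise ValueError("log transform needs strictly positive rates")
    y = [math.log(r) for r in rate]
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Closed-form simple regression: slope = Sxy / Sxx.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Hypothetical data: predictor and sales-per-employee rate for five offices.
x = [0, 1, 2, 3, 4]
rate = [0.6, 0.9, 1.3, 1.8, 2.5]
a, b = fit_log_linear(x, rate)
print(f"Each one-unit increase in x multiplies the typical rate by about {math.exp(b):.2f}")
```

One caveat worth disclosing: back-transforming exp(a + b * x) estimates the geometric mean of the rate, not its arithmetic mean, so predictions on the original scale are biased downward relative to the average rate.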

It’s important that the researcher describe in detail what she did, along with the possible biases and assumption violations this analysis introduces, so that readers can draw their own conclusions.

When There Isn’t One Right Analysis

So, to conclude:

1. If possible, plan out the data analysis before collecting the data. This will minimize, but not always eliminate, challenges. If you’re having trouble, get guidance at this point.

2. If an ideal analysis is available, by all means use it. Don’t shy away from it because you’re not familiar with it or because everyone in your field does something easier. That ideal analysis has the best chance of reaching your goal.

3. Your goal is to answer the research question accurately and communicate the results while meeting the assumptions of your statistical tests.

4. Recognize that all real data sets have challenges and rarely fit the ideal. Your job is to weigh the consequences of the different challenges and limitations.

5. Meet all assumptions you can, try to minimize bias, and be transparent about where you couldn’t.

And finally, realize that sometimes flaws in the measurement, design, or the data themselves will render the research question unanswerable.
