In our last post, we calculated Pearson and Spearman correlation coefficients in R and got a surprising result.

So let’s investigate the data a little more with a scatter plot.

We use the same version of the data set of tourists. We have data on tourists from different nations, their gender, number of children, and how much they spent on their trip.

Again we copy and paste the following data frame definition into R.

M <- structure(list(COUNTRY = structure(c(3L, 3L, 3L, 3L, 1L, 3L, 2L, 3L, 1L, 3L, 3L, 1L, 2L, 2L, 3L, 3L, 3L, 2L, 3L, 1L, 1L, 3L, 1L, 2L), .Label = c("AUS", "JAPAN", "USA"), class = "factor"), GENDER = structure(c(2L, 1L, 2L, 2L, 1L, 2L, 1L, 2L, 1L, 2L, 2L, 1L, 1L, 1L, 1L, 2L, 1L, 1L, 2L, 2L, 1L, 1L, 1L, 2L), .Label = c("F", "M"), class = "factor"), CHILDREN = c(2L, 1L, 3L, 2L, 2L, 3L, 1L, 0L, 1L, 0L, 1L, 2L, 2L, 1L, 1L, 1L, 0L, 2L, 1L, 2L, 4L, 2L, 5L, 1L), SPEND = c(8500L, 23000L, 4000L, 9800L, 2200L, 4800L, 12300L, 8000L, 7100L, 10000L, 7800L, 7100L, 7900L, 7000L, 14200L, 11000L, 7900L, 2300L, 7000L, 8800L, 7500L, 15300L, 8000L, 7900L)), .Names = c("COUNTRY", "GENDER", "CHILDREN", "SPEND"), class = "data.frame", row.names = c(NA, -24L))

M

attach(M)

plot(CHILDREN, SPEND)
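If you would rather not rely on attach(), the same plot can be drawn with with(), which looks up the variables inside the data frame. The sketch below is only an illustration: the name tourists and the axis labels are my own choices, and the two columns are copied from the data set above so that the snippet runs on its own.

```r
# Two columns of the tourist data, copied from the data frame above.
# "tourists" is a hypothetical name chosen so that M is not overwritten.
tourists <- data.frame(
  CHILDREN = c(2, 1, 3, 2, 2, 3, 1, 0, 1, 0, 1, 2, 2, 1, 1, 1, 0, 2, 1, 2, 4, 2, 5, 1),
  SPEND = c(8500, 23000, 4000, 9800, 2200, 4800, 12300, 8000, 7100, 10000, 7800, 7100,
            7900, 7000, 14200, 11000, 7900, 2300, 7000, 8800, 7500, 15300, 8000, 7900)
)

# with() evaluates plot() inside the data frame, so attach() is not needed.
with(tourists, plot(CHILDREN, SPEND,
                    xlab = "Number of children",
                    ylab = "Amount spent",
                    main = "Tourist spending by number of children"))
```

Readable axis labels cost one extra argument each and make the plot easier to share.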


Let’s use R to explore bivariate relationships among variables.

Part 7 of this series showed how to do a nice bivariate plot, but it’s also useful to have a correlation statistic.

We use a new version of the tourist data set from Part 20, which records tourists from different nations, their gender, and number of children. This version adds a new variable: the amount of money they spend while on vacation.

First, if the data object (A) for the previous version of the tourists data set is present in your R workspace, it is a good idea to remove it because it has some of the same variable names as the data set that you are about to read in. We remove A as follows:

rm(A)

Removing the object A ensures no confusion between different data objects that contain variables with similar names.
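If A is not in your workspace, rm(A) gives a warning. A slightly more defensive version checks first. This is just a sketch; the first line creates a stand-in object so that the snippet runs on its own.

```r
# Stand-in for the earlier data object (only so this snippet is runnable).
A <- data.frame(x = 1:3)

# Remove A only if it exists in the current environment.
# inherits = FALSE stops exists() from searching attached packages.
if (exists("A", inherits = FALSE)) rm(A)

# Confirm that A is gone.
exists("A", inherits = FALSE)
```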

Now copy and paste the following data frame definition into R.

M <- structure(list(COUNTRY = structure(c(3L, 3L, 3L, 3L, 1L, 3L, 2L, 3L, 1L, 3L, 3L, 1L, 2L, 2L, 3L, 3L, 3L, 2L, 3L, 1L, 1L, 3L, 1L, 2L), .Label = c("AUS", "JAPAN", "USA"), class = "factor"), GENDER = structure(c(2L, 1L, 2L, 2L, 1L, 2L, 1L, 2L, 1L, 2L, 2L, 1L, 1L, 1L, 1L, 2L, 1L, 1L, 2L, 2L, 1L, 1L, 1L, 2L), .Label = c("F", "M"), class = "factor"), CHILDREN = c(2L, 1L, 3L, 2L, 2L, 3L, 1L, 0L, 1L, 0L, 1L, 2L, 2L, 1L, 1L, 1L, 0L, 2L, 1L, 2L, 4L, 2L, 5L, 1L), SPEND = c(8500L, 23000L, 4000L, 9800L, 2200L, 4800L, 12300L, 8000L, 7100L, 10000L, 7800L, 7100L, 7900L, 7000L, 14200L, 11000L, 7900L, 2300L, 7000L, 8800L, 7500L, 15300L, 8000L, 7900L)), .Names = c("COUNTRY", "GENDER", "CHILDREN", "SPEND"), class = "data.frame", row.names = c(NA, -24L))

M

attach(M)

Do tourists with greater numbers of children spend more? Let’s calculate the correlation between CHILDREN and SPEND, using the cor() function.

R <- cor(CHILDREN, SPEND)

R

[1] -0.2612796

We have a weak correlation, but it’s negative! Tourists with a greater number of children tend to spend less rather than more!

(Even so, we’ll plot this in our next post to explore this unexpected finding).

We can round to any number of decimal places using the round() command.

round(R, 2)

[1] -0.26

The percentage of shared variance (100 × r²) is:

100 * R^2

[1] 6.826704

To test whether your correlation coefficient differs from 0, use the cor.test() command.

cor.test(CHILDREN, SPEND)

Pearson's product-moment correlation

data: CHILDREN and SPEND

t = -1.2696, df = 22, p-value = 0.2175

alternative hypothesis: true correlation is not equal to 0

95 percent confidence interval:

-0.6012997 0.1588609

sample estimates:

cor

-0.2612796

The cor.test() command returns the correlation coefficient, but also gives the p-value for the correlation. In this case, we see that the correlation is not significantly different from 0 (p is approximately 0.22).
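The printed output is convenient to read, but the result of cor.test() is also a list, so the individual pieces can be pulled out by name. Here is a minimal sketch; the name tourists is my own, and the two columns are copied from the data set above so that the snippet is self-contained.

```r
# Two columns of the tourist data, copied from the data frame above.
# "tourists" is a hypothetical name used only in this sketch.
tourists <- data.frame(
  CHILDREN = c(2, 1, 3, 2, 2, 3, 1, 0, 1, 0, 1, 2, 2, 1, 1, 1, 0, 2, 1, 2, 4, 2, 5, 1),
  SPEND = c(8500, 23000, 4000, 9800, 2200, 4800, 12300, 8000, 7100, 10000, 7800, 7100,
            7900, 7000, 14200, 11000, 7900, 2300, 7000, 8800, 7500, 15300, 8000, 7900)
)

# Store the test result instead of only printing it.
ct <- cor.test(tourists$CHILDREN, tourists$SPEND)

ct$estimate   # the correlation coefficient
ct$p.value    # p-value for the test that the true correlation is 0
ct$conf.int   # 95 percent confidence interval
ct$parameter  # degrees of freedom
```

Storing the result this way is handy when you want to report the values in a table or reuse them in later calculations.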

Of course we have only a few values of the variable CHILDREN, and this fact will influence the correlation. Just how many values of CHILDREN do we have? Can we use the levels() command directly? (Recall that the term “level” has a few meanings in statistics, one of which is the values of a categorical variable, aka “factor”.)

levels(CHILDREN)

NULL

R does not recognize CHILDREN as a factor. In order to use the levels() command, we must turn CHILDREN into a factor temporarily, using as.factor().

levels(as.factor(CHILDREN))

[1] "0" "1" "2" "3" "4" "5"
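Another way to look at the distinct values, without converting to a factor, is table(), which also shows how many tourists fall at each value. This is only a sketch; the vector children is a copy of the CHILDREN column under a name of my own choosing.

```r
# Copy of the CHILDREN column from the data frame above
# ("children" is a hypothetical name used only here).
children <- c(2, 1, 3, 2, 2, 3, 1, 0, 1, 0, 1, 2, 2, 1, 1, 1, 0, 2, 1, 2, 4, 2, 5, 1)

# Frequency of each distinct value.
child_counts <- table(children)
child_counts

# Number of distinct values.
length(unique(children))
```

The counts make it clear that most of the observations sit at one or two children, with very few at four or five.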

So we have six levels of CHILDREN. CHILDREN is a discrete variable without many values, so a Spearman correlation can be a better option. Let’s see how to implement a Spearman correlation:

cor(CHILDREN, SPEND, method = "spearman")

[1] -0.3116905

We have obtained a similar but slightly different correlation coefficient estimate because the Spearman correlation is indeed calculated differently than the Pearson.
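To see what "calculated differently" means, note that the Spearman coefficient is the Pearson coefficient computed on the ranks of the data. The sketch below checks this; the names children, spend, rho and rho_by_ranks are my own, and the vectors are copies of the two columns above.

```r
# Copies of the CHILDREN and SPEND columns from the data frame above.
children <- c(2, 1, 3, 2, 2, 3, 1, 0, 1, 0, 1, 2, 2, 1, 1, 1, 0, 2, 1, 2, 4, 2, 5, 1)
spend <- c(8500, 23000, 4000, 9800, 2200, 4800, 12300, 8000, 7100, 10000, 7800, 7100,
           7900, 7000, 14200, 11000, 7900, 2300, 7000, 8800, 7500, 15300, 8000, 7900)

# Spearman correlation computed directly.
rho <- cor(children, spend, method = "spearman")

# Pearson correlation of the ranks (ties receive average ranks by default).
rho_by_ranks <- cor(rank(children), rank(spend))

all.equal(rho, rho_by_ranks)

# Significance test; exact = FALSE avoids the warning that an exact
# p-value cannot be computed when the data contain ties.
cor.test(children, spend, method = "spearman", exact = FALSE)
```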

Why not plot the data? We will do so in our next post.

About the Author: David Lillis has taught R to many researchers and statisticians. His company, Sigma Statistics and Research Limited, provides both on-line instruction and face-to-face workshops on R, and coding services in R. David holds a doctorate in applied statistics.

See our full R Tutorial Series and other blog posts regarding R programming.

I mentioned in my last post that R Commander can do a LOT of data manipulation, data analyses, and graphs in R without you ever having to program anything.

Here I want to give you some examples, so you can see how truly useful this is.

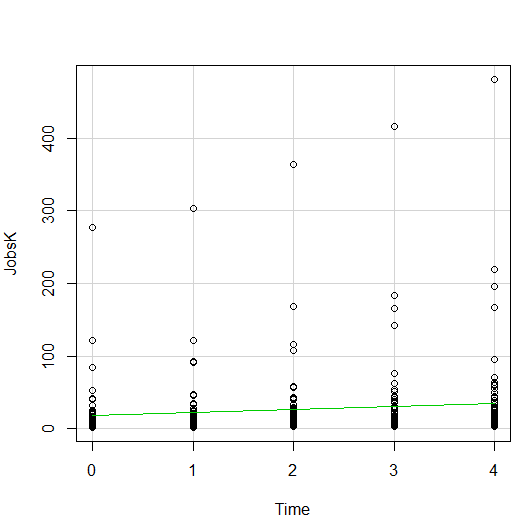

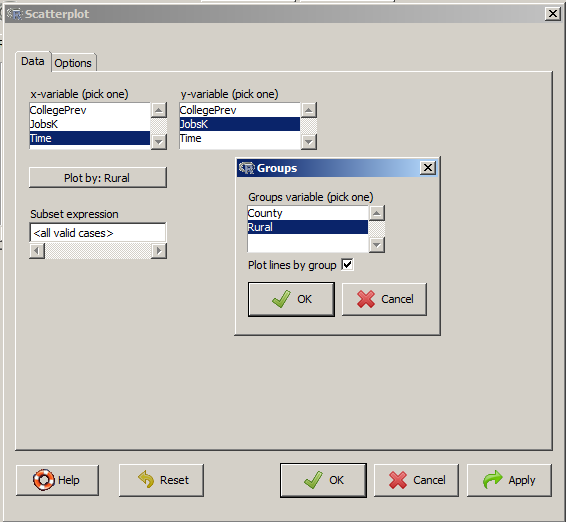

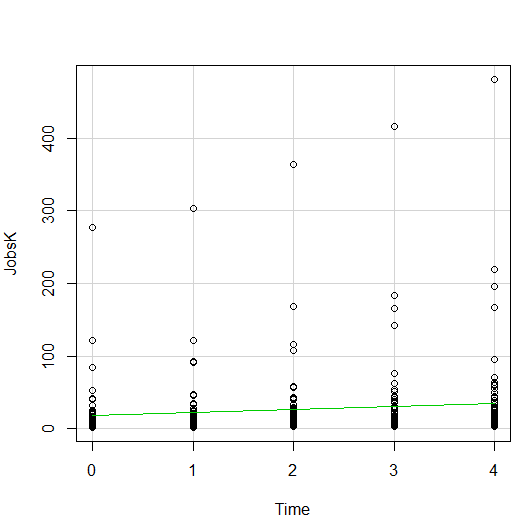

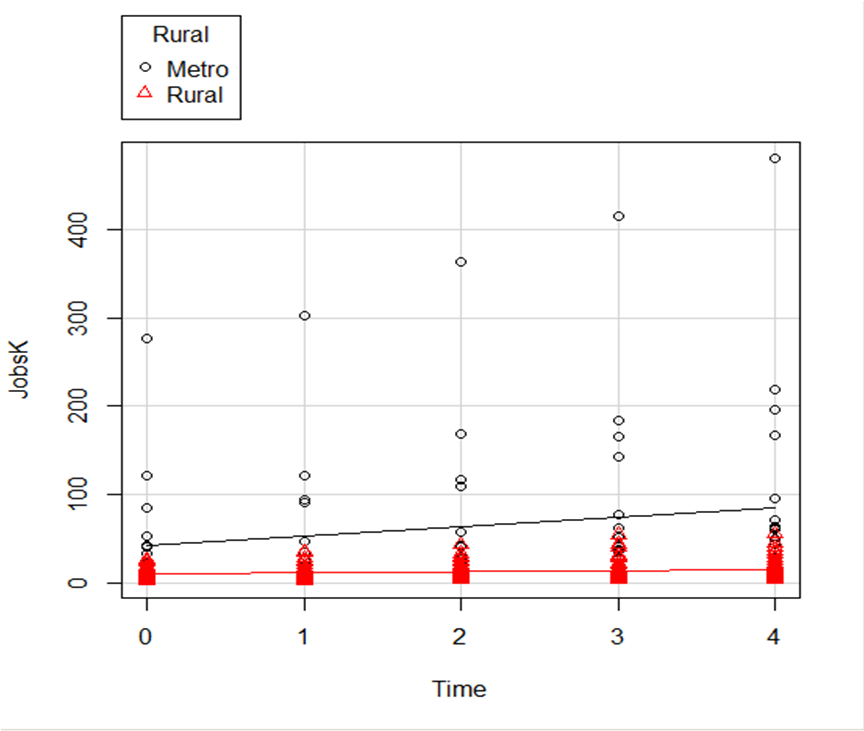

Let’s start with a simple scatter plot between Time and the number of Jobs (in thousands) in 67 counties. Time is measured in decades since 1960.

The green line is the best fit linear regression line.

This wasn’t the default in R Commander (I actually had to remove a few things to get to this), but it’s a useful way to start out.

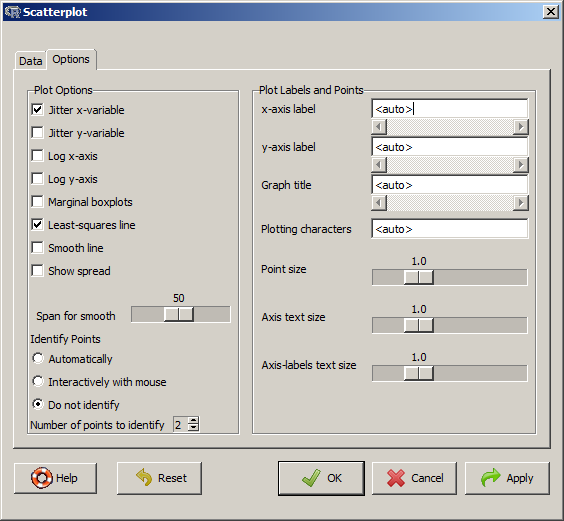

A few ways we can easily customize this graph:

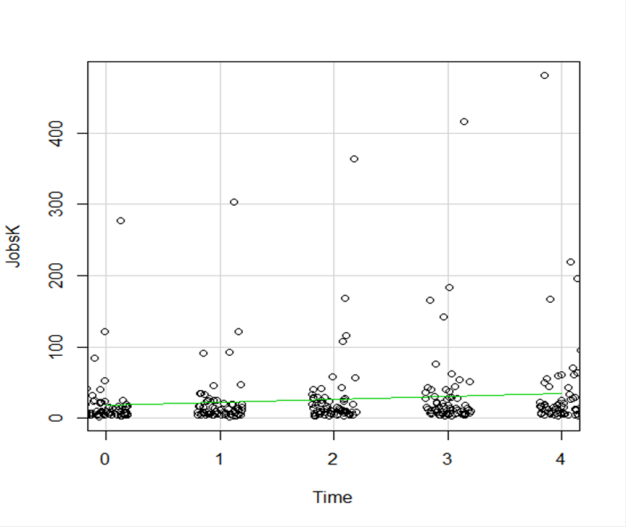

Jittering

We see here a common issue in scatter plots: because the X values are discrete, the points are all on top of each other.

It’s difficult to tell just how many points there are at the bottom of the graph; it’s just a mass of black.

One great way to solve this is by jittering the points.

All this means is that instead of putting identical points right on top of each other, we move them slightly, randomly, in either one or both directions. In this example, I jittered only horizontally.

So while the points aren’t graphed exactly where they are, we can see the trends and we can now see how many points there are in each decade.
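If you are curious what horizontal jittering looks like in code, here is a rough sketch in base R. It is not the code R Commander generates, and the data are simulated: the number of decades, the job counts and the jitter amount are all made up for illustration.

```r
set.seed(1)  # make the simulated data and the jitter reproducible

# Simulated stand-in data: 67 counties observed at 5 decades (values are invented).
decade <- rep(0:4, each = 67)
jobs <- 10 + 5 * decade + rnorm(length(decade), sd = 8)

# jitter() adds a small amount of uniform noise; only the x values are shifted.
decade_jittered <- jitter(decade, amount = 0.15)

plot(decade_jittered, jobs,
     xlab = "Time (decades since 1960)",
     ylab = "Jobs (thousands)")

# The regression line is fitted to the original, unjittered values.
abline(lm(jobs ~ decade), col = "green")
```

Fitting the line to the unjittered values keeps the model honest; the jitter is only there to make the display readable.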

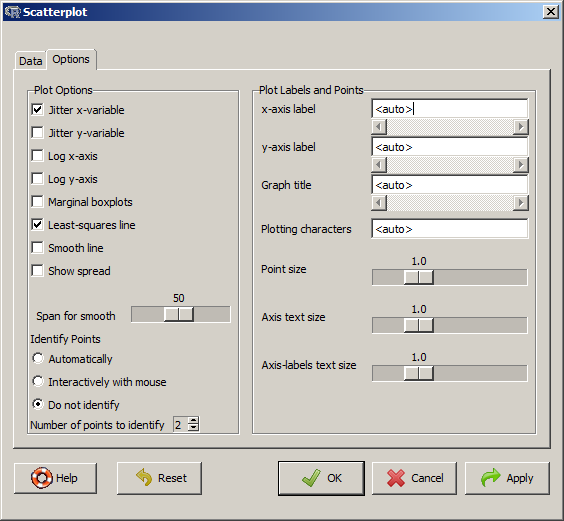

How hard is this to do in R Commander? One click:

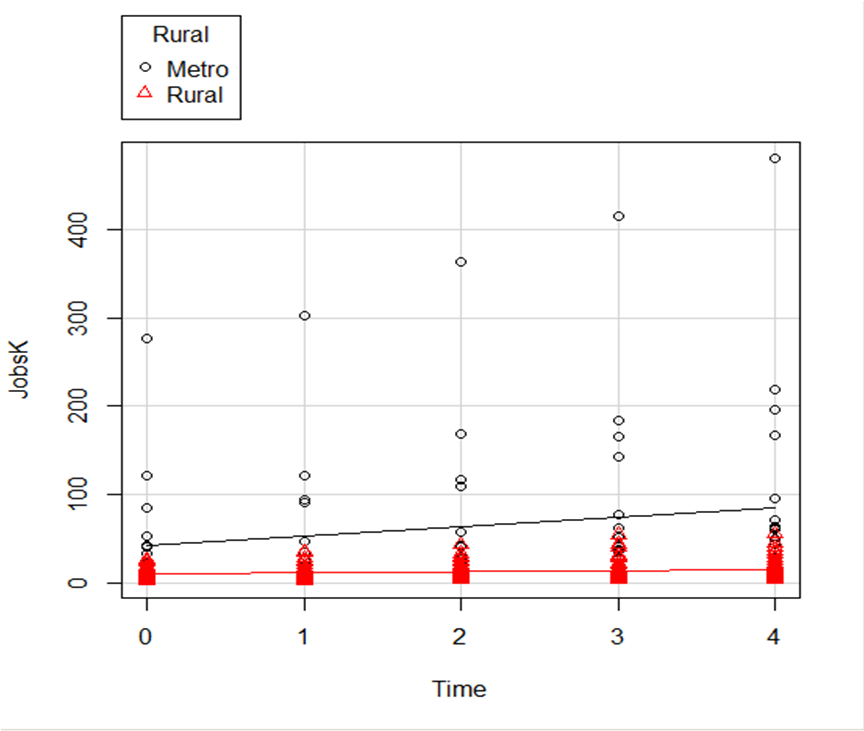

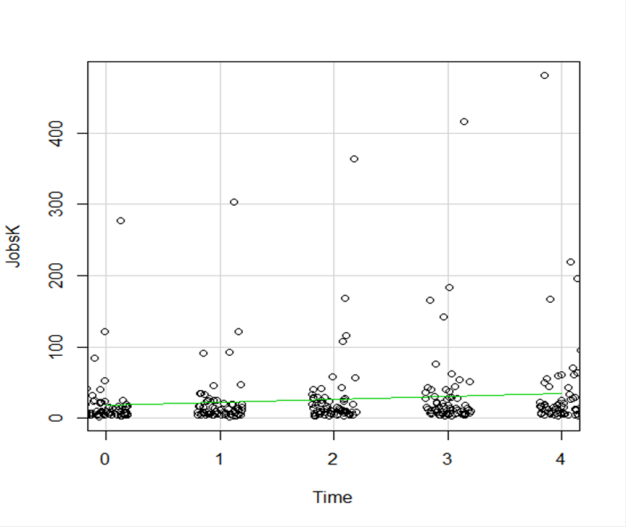

Regression Lines by Group

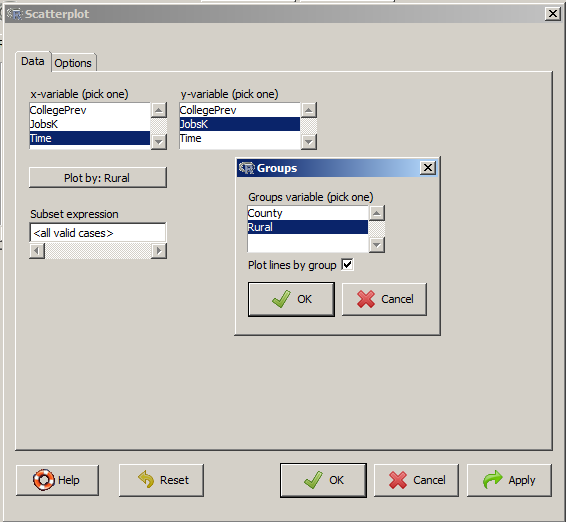

Another useful change to a scatter plot is to add a separate regression line for each level of a factor in the data set.

In this example, the observations are measured for counties and each county is classified as being either Rural or Metropolitan.

If we’d like to see if the growth in jobs over time is different in Rural and Metropolitan counties, we need a separate line for each group.

In R Commander we can do this quite easily. Not only do we get two regression lines, but each point is clearly designated as being from either a Rural or Metropolitan county through its color and shape.

It’s quite clear that not only was there more growth in the number of jobs in Metro counties, there was almost no change at all in the Rural counties.
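For comparison, the same idea can be sketched by hand in base R: fit one regression per group and draw each line in its group's color. The data below are simulated and the names (counties, area, cols, pchs, slopes) are my own, so treat this as an illustration rather than the code R Commander writes.

```r
set.seed(2)  # reproducible simulated data

# Simulated counties: Metro jobs grow over time, Rural jobs barely change.
decade <- rep(0:4, times = 20)
area <- factor(rep(c("Rural", "Metro"), each = 50))
jobs <- ifelse(area == "Metro", 20 + 15 * decade, 10 + 0.5 * decade) +
  rnorm(100, sd = 5)
counties <- data.frame(decade, area, jobs)

# One color and one plotting symbol per group.
cols <- c(Metro = "blue", Rural = "red")
pchs <- c(Metro = 16, Rural = 17)
grp <- as.character(counties$area)

plot(counties$decade, counties$jobs, col = cols[grp], pch = pchs[grp],
     xlab = "Time (decades since 1960)", ylab = "Jobs (thousands)")

# Fit and draw a separate regression line for each group.
slopes <- c(Metro = NA_real_, Rural = NA_real_)
for (g in levels(counties$area)) {
  fit <- lm(jobs ~ decade, data = counties[counties$area == g, ])
  abline(fit, col = cols[[g]], lwd = 2)
  slopes[g] <- coef(fit)[["decade"]]
}

legend("topleft", legend = names(cols), col = cols, pch = pchs)
slopes
```

Comparing the two slopes gives a quick numeric check on what the lines show visually.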

And once again, how difficult is this? This time, two clicks.

There are quite a few modifications you can make just using the buttons, but of course, R Commander doesn’t do everything.

For example, I could not figure out how to change those red triangles to green rectangles through the menus.

But that’s the best part about R Commander. It works very much like the Paste button in SPSS.

Meaning, it creates the code for you. So I can take the code it created, then edit it to get my graph looking the way I want.

I don’t have to memorize which command creates a scatter plot.

I don’t have to memorize how to pull my SPSS data into R or tell R that Rural is a factor. I can do all that through R Commander, then just look up the option to change the color and shape of the red triangles.
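As an illustration of that kind of small edit, in base R graphics the plotting symbol is set with pch and the color with col. The sketch below uses simulated data and base plot() rather than whatever code R Commander generates, so the variable names and values are assumptions.

```r
set.seed(3)  # reproducible simulated data

# Simulated stand-in data: 50 rural and 50 metropolitan observations.
decade <- rep(0:4, times = 20)
rural <- rep(c(TRUE, FALSE), each = 50)
jobs <- ifelse(rural, 10, 20 + 15 * decade) + rnorm(100, sd = 5)

# pch = 15 is a filled square, pch = 16 a filled circle.
point_col <- ifelse(rural, "green", "black")
point_pch <- ifelse(rural, 15, 16)

plot(decade, jobs, col = point_col, pch = point_pch,
     xlab = "Time (decades since 1960)", ylab = "Jobs (thousands)")
```

The help page ?points lists the available symbol numbers.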

with a mean of zero and constant variance.
